The ‘Dark’ Side of AI: Algorithmic Bias, Influenced Decision-Making and Defining an AI Ethics Strategy

According to a 2019 report from the Centre for the Governance of AI at the University of Oxford, 82% of Americans believe that robots and AI should be carefully managed. Concerns cited ranged from how AI is used in surveillance and in spreading fake content online (known as deep fakes when they include doctored video images and audio generated with help from AI) to cyber attacks, infringements on data privacy, hiring bias, autonomous vehicles, and drones that don’t require a human controller.

What happens when injustices are propagated not by individuals or organizations but by a collection of machines? Lately, there’s been increased attention on the downsides of artificial intelligence and the harms it may produce in our society, from inequitable access to opportunities to the escalation of polarization in our communities. Not surprisingly, there’s been a corresponding rise in discussion around how to regulate AI.

AI has already shown itself, very publicly, to be capable of harmful biases, which can lead to unfair decisions based on attributes that are protected by law. There can be bias in the data inputs, which can be poorly selected, outdated, or skewed in ways that embody our own historical societal prejudices. Most deployed AI systems do not yet embed methods to put data sets to a fairness test or otherwise compensate for problems in the raw material.
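As an illustration of what a basic dataset fairness test can look like, here is a minimal Python sketch of the disparate impact ratio: the selection rate of the least-favoured group divided by that of the most-favoured group, screened against the 0.8 "four-fifths" rule of thumb from US employment guidance. The function names, the (group, label) record format and the hiring data are hypothetical, and a single ratio is only a screening signal, not a full fairness audit.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive outcomes per group.

    `records` is an iterable of (group, label) pairs where label is 1 for a
    positive outcome (e.g. hired) and 0 otherwise. Hypothetical format.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, label in records:
        totals[group] += 1
        positives[group] += label
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact(records):
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody selected in any group: no disparity to measure
    return min(rates.values()) / highest


def passes_four_fifths(records, threshold=0.8):
    """Screen a dataset against the four-fifths rule of thumb."""
    return disparate_impact(records) >= threshold


# Hypothetical historical hiring data: 50/100 of group A hired, 30/100 of group B.
hiring = [("A", 1)] * 50 + [("A", 0)] * 50 + [("B", 1)] * 30 + [("B", 0)] * 70
print(round(disparate_impact(hiring), 2))  # 0.6
print(passes_four_fifths(hiring))          # False
```

A model trained on data like this would likely learn the same skew, which is why such a check belongs before training, not only after deployment.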

There also can be bias in the algorithms themselves and in which features they deem important (or not). For example, companies may vary their product prices based on information about shopping behaviors. If this information ends up being directly correlated with gender or race, then AI is making decisions that could result in a PR nightmare, not to mention legal trouble. As these AI systems scale in use, they amplify any unfairness in them. The decisions these systems output, and which people then comply with, can eventually propagate to the point that biases become accepted as global truth.
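To make the pricing example concrete, one simple screen is to check whether any input feature tracks a protected attribute closely enough to act as a proxy for it. The sketch below computes a Pearson correlation between each numeric feature and a 0/1 encoding of a protected attribute and flags features above a threshold. The feature names, data and the 0.5 cut-off are illustrative assumptions; correlation only captures linear relationships, so a feature that passes this check can still leak protected information in combination with others.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        return 0.0
    return cov / (spread_x * spread_y)


def flag_proxies(features, protected, threshold=0.5):
    """Names of features whose absolute correlation with `protected` meets the threshold."""
    return sorted(
        name for name, values in features.items()
        if abs(pearson(values, protected)) >= threshold
    )


# Hypothetical customers: protected attribute encoded as 0/1.
protected = [0, 0, 0, 1, 1, 1]
features = {
    "basket_size": [1, 2, 3, 1, 2, 3],
    "luxury_category_spend": [10, 12, 11, 40, 42, 41],
}
print(flag_proxies(features, protected))  # ['luxury_category_spend']
```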

The Push to Bring In AI Ethics

While we have yet to reach firm conclusions on tech regulation, the last three years have seen a sharp increase in forums and channels for discussing governance. In the U.S., the Obama administration issued a report in 2016 on preparing for the future of artificial intelligence after holding public workshops examining AI, law, and governance; AI technology, safety, and control; and even the social and economic impacts of AI. The Institute of Electrical and Electronics Engineers (IEEE), an engineering, computing, and technology professional organization that establishes standards for maximizing the reliability of products, put together a crowdsourced global treatise on the ethics of autonomous and intelligent systems. In the academic world, the MIT Media Lab and Harvard University established a $27 million initiative on the ethics and governance of AI, Stanford is in the midst of a 100-year study of AI, and Carnegie Mellon University established a centre to explore AI ethics.

Corporations are forming their own consortiums to join the conversation. The Partnership on AI to Benefit People and Society was founded by a group of AI researchers representing six of the world’s largest technology companies: Apple, Amazon, DeepMind/Google, Facebook, IBM, and Microsoft. It was established to frame best practices for AI, including constructs for fair, transparent, and accountable AI. It now has more than 80 partner companies.

Of course, individual companies are also weighing in on what kinds of ethical frameworks they will operate under. Microsoft president Brad Smith has written about the need for public regulation and corporate responsibility around facial recognition technology. Google established an AI ethics advisory council. Earlier this year, Amazon started a collaboration with the National Science Foundation to fund research to accelerate fairness in AI, although some immediately questioned the potential conflict of interest of having research funded by such a giant player in the field.

Are data regulations around the corner?

There is a need to develop a global perspective on AI ethics, because different societies around the world have very different perspectives on privacy and ethics. Within Europe, for example, U.K. citizens are willing to tolerate video camera monitoring on London’s central High Street, perhaps because of past IRA bombings, while Germans are much more privacy-oriented, influenced by the former intrusions of East German Stasi spies. In China, the public is tolerant of AI-driven applications like facial recognition and social credit scores, at least in part because social order is a key tenet of Confucian moral philosophy. Microsoft’s AI ethics research project involves ethnographic analysis of different cultures, gathered through close observation of behaviours, and advice from external academics such as Erin Meyer of INSEAD. Eventually, we could foresee a collection of policies about how to use AI and related technologies. Some have already emerged, ranging from avoiding algorithmic bias to model transparency to specific applications like predictive policing.

The longer-term view is that although developing AI standards is not the most sought-after work, it is critical for making AI not only more useful but also safe for consumer use. Given that AI is still young but is quickly being embedded into every application that impacts our lives, we can envisage several countries issuing AI ethics guidelines that are expected to lead to mid- and long-term policy recommendations on AI-related challenges and opportunities.

Chief AI Ethics Officer on the cards?

As businesses pour resources into designing the next generation of tools and products powered by AI, people are not inclined to assume that these companies will automatically step up to the ethical and legal responsibilities if these systems go awry.

The time when enterprises could simply ask the world to trust artificial intelligence and AI-powered products is long gone. Trust around AI requires fairness, transparency, and accountability. But even AI researchers can’t agree on a single definition of fairness: There’s always a question of who is in the affected groups and what metrics should be used to evaluate, for instance, the impact of bias within the algorithms.
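The disagreement over fairness definitions is easy to demonstrate. In this minimal Python sketch (hypothetical data and function names), two groups receive positive predictions at exactly the same rate, so demographic parity is satisfied, yet qualified members of group B are approved only half as often as those of group A, so equal opportunity (equal true positive rates) is violated. Which gap matters more is a policy choice, not something the code can settle.

```python
def _rate(values):
    """Mean of 0/1 values; an empty list is treated as 0.0 for simplicity."""
    return sum(values) / len(values) if values else 0.0


def group_metrics(rows):
    """Per-group selection rate and true positive rate.

    `rows` holds (group, y_true, y_pred) triples with 0/1 labels.
    """
    by_group = {}
    for group, y_true, y_pred in rows:
        by_group.setdefault(group, []).append((y_true, y_pred))
    metrics = {}
    for group, pairs in by_group.items():
        metrics[group] = {
            "selection_rate": _rate([pred for _, pred in pairs]),
            "true_positive_rate": _rate([pred for true, pred in pairs if true == 1]),
        }
    return metrics


def fairness_gaps(rows):
    """Largest between-group difference for two common fairness definitions."""
    metrics = group_metrics(rows).values()
    selection = [m["selection_rate"] for m in metrics]
    tpr = [m["true_positive_rate"] for m in metrics]
    return {
        "demographic_parity_gap": max(selection) - min(selection),
        "equal_opportunity_gap": max(tpr) - min(tpr),
    }


rows = [
    ("A", 1, 1), ("A", 1, 1), ("A", 0, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 0, 1), ("B", 0, 0),
]
print(fairness_gaps(rows))
# {'demographic_parity_gap': 0.0, 'equal_opportunity_gap': 0.5}
```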

Since organizations have not figured out how to stem the tide of “bad” AI, their next best step is to contribute to the conversation. Denying that bad AI exists or fleeing from the discussion isn’t going to make the problem go away. Identifying CXOs who are willing to join the dialogue and finding individuals willing to help establish standards are the actions organizations should be thinking about today. This is where a Chief AI Ethics Officer comes in: someone to evangelize and educate, and to ensure that the enterprise is aware of AI ethics and bought into it.

When done correctly, AI can offer immeasurable good. It can provide educational interventions to maximize learning in underserved communities, improve health care based on its access to our personal data, and help people do their jobs better and more efficiently. Now is not the time to hinder progress. Instead, it’s the time for enterprises to make a concerted effort to ensure that the design and deployment of AI are fair, transparent, and accountable for all stakeholders, and to be part of shaping the coming standards and regulations that will make AI work for all.

Related Posts

AIQRATIONS

Building AI-enabled organisations

The adoption and benefit realisation from cognitive technologies is gaining increasing momentum. According to a PwC report, 72% of business executives surveyed believe that artificial intelligence (AI) will be a strong business advantage and 67% believe that a combination of human and machine intelligence is a more powerful entity than each one on its own.

Another survey, conducted by Deloitte, reports that on average 83% of respondents who have actively deployed AI in the enterprise see moderate to substantial benefits from it, a share that rises with the number of AI deployments.

These studies make it abundantly clear that AI is occupying a high and increasing mindshare among business executives – who have a strong appreciation of the bottom line impact delivered by cognitive systems, through improved efficiencies.

AI-first Mindset

Having said that, as AI becomes more and more mainstream in organisations, piecemeal implementations will deliver a lower marginal impact on an organisation’s competitive advantage. While early adopters were once able to realise transformational benefits through siloed AI deployments, AI is now fast maturing into a must-have in the enterprise, and that calls for a different approach.

To realise true competitive advantage, organisations need to have an AI-first mindset. It is the new normal in accelerating business decisions. It was once said that every company is a technology company – meaning that all companies were expected to have mature technology backbones to deliver business impact and customer satisfaction. That dictum is now being amended to say – every company is a cognitive company.

To deliver on this promise, companies need to weave AI into the very fabric of their strategy. To realise competitive advantage tomorrow, we need to embed AI across the organisation today, with a strong, stable and scalable foundation. Here are three building blocks that are needed to create that robust foundation.

1. Enrich Data & Algorithm Repositories

If data is indeed the new oil (which it is), organisations that hold the deepest reserves and the most advanced refinery will be the ones that win in this new landscape. Companies having the most meaningful repository of data, along with fit-for-purpose proprietary algorithms will most likely enjoy a sizeable competitive advantage.

So, companies need to improve and re-invent their data generation and collection mechanisms. Data generation will help reduce their reliance on external data providers and help them own the data for conducting meaningful, real-time analysis by continuously enriching the data set.

Alongside this, corporations also need to build an ‘algorithm factory’ to speed up the development of accurate, fit-for-purpose and meaningful algorithms. The algorithm factory would need to push out data models through an iterative process that improves both speed and accuracy with each cycle.

This would enable the data and analysis capabilities of companies to grow in a scalable manner. While this task would largely fall under the aegis of data science teams, business teams would be required to provide timely interventions and feedback – to validate impact delivered by these models, and suggest course-corrections where necessary.
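One way to picture this iterative loop with business feedback is a champion/challenger scheme: a new model version replaces the current one only if it scores better on validation data and the business team has signed off on its impact. The sketch below is a hypothetical illustration in Python; the class names, the single validation score and the approval flag are assumptions, not a prescribed design.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    name: str                 # which business problem the model serves
    version: int
    validation_score: float   # e.g. accuracy on a held-out set (assumed metric)
    business_approved: bool = False  # sign-off from the business team


@dataclass
class AlgorithmFactory:
    champions: dict = field(default_factory=dict)  # name -> current ModelVersion

    def submit(self, candidate: ModelVersion) -> bool:
        """Promote the candidate if it beats the champion and is approved."""
        current = self.champions.get(candidate.name)
        beats_current = current is None or candidate.validation_score > current.validation_score
        if beats_current and candidate.business_approved:
            self.champions[candidate.name] = candidate
            return True
        return False


factory = AlgorithmFactory()
print(factory.submit(ModelVersion("churn", 1, 0.70, business_approved=True)))  # True
print(factory.submit(ModelVersion("churn", 2, 0.74)))                          # False: no sign-off
print(factory.submit(ModelVersion("churn", 3, 0.76, business_approved=True)))  # True
print(factory.champions["churn"].version)                                      # 3
```

Requiring both conditions keeps data science teams from shipping a statistically better model that the business has not validated, and vice versa.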

Another key aspect of this process is to enable a transparent, cross-organisation view into these repositories. This will allow employees to collaborate and innovate rapidly by learning what has already been done, and will reduce the time and effort needlessly spent developing something that already exists.
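A cross-organisation view can start as simply as a searchable catalogue of existing models. This minimal Python sketch assumes a hypothetical entry format (name, owning team, description, tags) and a plain keyword match; a real repository would likely sit on a proper model registry or search service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogueEntry:
    name: str
    owner_team: str
    description: str
    tags: frozenset


def search(catalogue, *keywords):
    """Names of entries whose name, team, description or tags contain every keyword."""
    wanted = [k.lower() for k in keywords]
    hits = []
    for entry in catalogue:
        text = " ".join([entry.name, entry.owner_team, entry.description, *entry.tags]).lower()
        if all(k in text for k in wanted):
            hits.append(entry.name)
    return sorted(hits)


catalogue = [
    CatalogueEntry("churn-predictor", "marketing",
                   "Predicts customer churn from usage data", frozenset({"churn", "classification"})),
    CatalogueEntry("invoice-ocr", "finance",
                   "Extracts fields from scanned invoices", frozenset({"ocr", "documents"})),
]
print(search(catalogue, "churn"))           # ['churn-predictor']
print(search(catalogue, "invoice", "ocr"))  # ['invoice-ocr']
```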

2. AI Education for Workforce

Operationalising AI requires a convergence of different skill sets. According to the above-cited Deloitte survey, 37% of respondents felt that their managers didn’t understand cognitive technology – which was a hindrance to their AI deployments.

We need to mix different streams of people to build a scalable AI-centric organisation. For instance, business teams need to be continuously trained on the operational aspects of AI, its various types, use cases and benefits – to appreciate how AI can impact their area of business.

Technology teams need to be re-skilled around the development and deployment of AI applications. Data processing and analyst teams need to better understand how to build scalable computational models, which can run more autonomously and improve fast.

Unlike a typical technology transformation, AI transformation is a business reengineering exercise and requires cross-functional teams to collaborate and enrich their understanding of AI and how it impacts their functions, while building a scalable AI programme.

The implicit advantage of developing topical training programmes and involving a larger share of the workforce is that it mitigates the fear, uncertainty and doubt (FUD) typically associated with automation initiatives. By giving employees the opportunity to learn and contribute in a meaningful way, we can remove bottlenecks and change-aversion and enable a successful AI transformation.

3. Ethical and Security Measures

The 4th Industrial Revolution will require a reassessment of ethical and security practices around data, algorithms and the applications that use them.

By introducing renewed standards and ethical codes, enterprises can address two important concerns people typically raise – how much power can/should AI exercise and how can we stay protected in cases of overreach.

We are already witnessing teething trouble – with accidents involving self-driving cars resulting in pedestrian deaths, and the continuing Facebook-Cambridge Analytica saga.

Building a strong grounding for AI systems will go a long way toward improving customer and social confidence that personal data is in safe hands and protected from abuse, enabling people to give informed consent for the use of their data. To that end, we need to keep refining our understanding of the ethical standards for AI implementations.

AI and other cyber-physical systems are key components of the next generation of business. According to a report by the semiconductor company ARM, 61% of respondents believe that AI can make the world a better place. To increase that sentiment even further, make AI business-as-usual and power the cognitive enterprise, it is critical that we subject machine intelligence to the same level of governance, scrutiny and ethical standards that we would apply to any core business process.

How the Rise of Exponential Technologies – AI, RPA, Blockchain, Cybersecurity – Will Redefine the Talent Demand & Supply Landscape

The current boom in exponential technologies is causing strong disruption in the talent availability landscape, with traditional, more mechanical roles being wiped out, paving the way for huge demand for skills and professions based on learning and design thinking. The World Economic Forum said in 2016 that 60% of children entering school today will work in jobs that do not yet exist.

While there is a risk to jobs due to these trends, the good news is that a huge number of new jobs are getting created as well in areas like AI, Machine Learning, Robotic Process Automation (RPA), Blockchain, Cybersecurity, etc. It is clearly a time of career pivot for IT professionals to make sure they are where the growth is.

AI and Machine Learning upending the traditional IT Skill Requirement

AI and Machine Learning will create new demand for skills to guide their growth and development. These emerging areas of expertise will likely be technical or knowledge-intensive fields. In the near term, the competition for workers in these areas may change how companies focus their talent strategies.

At a time when demand for data scientists and engineers is projected to grow 39% by 2020, employers are seeking out leaders who can work effectively with technologists to ask the right questions and apply the insight to solve business problems. Business schools are therefore launching more programs to equip graduates with the skills they need to succeed. Toronto’s Rotman School of Management, for example, recently launched a nine-month program that provides recent college graduates with advanced data management, analytical and communication skills.

According to the Organisation for Economic Co-operation and Development (OECD), only 5-10% of labor would be displaced by intelligent automation, and new job creation will offset the losses.

The future will increase the value of workers with a strong learning ability and strength in human interaction. On the other hand, today’s highly paid, experienced, and skilled knowledge workers may be at risk of losing their jobs to automation.

Many occupations that might appear to require experience and judgment — such as commodity traders — are being outdone by increasingly sophisticated machine-learning programs capable of quickly teasing subtle patterns out of large volumes of data. If your job involves distracting a patient while delivering an injection, guessing whether a crying baby wants a bottle or a diaper change, or expressing sympathy to calm an irate customer, you needn’t worry that a robot will take your job, at least for the foreseeable future.

Ironically, the best qualities for tomorrow’s worker may be the strengths usually associated with children. Learning has been at the centre of the new revival of AI. But the best learners in the universe, by far, are still human children. At first, it was thought that the quintessential preoccupations of the officially smart few, like playing chess or proving theorems — the corridas of nerd machismo — would prove to be hardest for computers. In fact, they turn out to be easy. Things every dummy can do like recognizing objects or picking them up are much harder. And it turns out to be much easier to simulate the reasoning of a highly trained adult expert than to mimic the ordinary learning of every baby. The emphasis on learning is a key change from previous decades and rounds of automation.

According to Pew Research, 47% of all employment opportunities will be occupied by machines within the next two decades.

What types of skills will be needed to fuel the development of AI over the next several years? These prospects include:

- Ethics: The only clear “new” job category is that of AI ethicist, a role that will manage the risks and liabilities associated with AI, as well as transparency requirements. Such a role might be imagined as a cross between a data scientist and a compliance officer.

- AI Training: Machine learning will require companies to invest in personnel capable of training AI models successfully, and then they must be able to manage their operations, requiring deep expertise in data science and an advanced business degree.

- Internet of Things (IoT): Strong demand is anticipated for individuals to support the emerging IoT, which will require electrical engineering, radio propagation, and network infrastructure skills at a minimum, plus specific skills related to AI and IoT.

- Data Science: Current shortages for data scientists and individuals with skills associated with human/machine parity will likely continue.

- Additional Skill Areas: Related to emerging fields of expertise are a number of specific skills, many of which overlap various fields of expertise. Examples of potentially high-demand skills include modeling, computational intelligence, machine learning, mathematics, psychology, linguistics, and neuroscience.

In addition to its effect on traditional knowledge workers and skilled positions, AI may influence another aspect of the workplace: gender diversity. Men hold 97 percent of the 2.5 million U.S. construction and carpentry jobs. These male workers stand more than a 70 percent chance of being replaced by robotic workers. By contrast, women hold 93 percent of registered nurse positions. Their risk of obsolescence is vanishingly small: an estimated probability of about 0.009, or less than 1 percent.

RPA significantly disrupting traditional computing jobs

RPA is not true AI. RPA uses traditional computing technology to drive its decisions and responses, but it does this on a scale large and fast enough to roughly mimic the human perspective. AI, on the other hand, applies machine and deep learning capabilities to go beyond massive computing to understand, learn, and advance its competency without human direction or intervention — a truly intelligent capability. RPA is delivering more near-term impact, but the future may be shaped by more advanced applications of true AI.

In 2016, a KPMG study estimated that 100 million global knowledge workers could be affected by robotic process automation by 2025.

The first reaction would be that all back-office and middle-office roles currently handling repetitive tasks would become redundant. According to a 2013 report from the Oxford Martin School at the University of Oxford, 47% of all American job functions could be automated within 20 years.

Indeed, India’s IT services industry is set to lose 6.4 lakh (640,000) low-skilled positions to automation by 2021, according to U.S.-based HfS Research. It said this was mainly because there are a large number of non-customer-facing roles at the low-skill level in countries like India, with a significant amount of “back office” processing and IT support work likely to be automated and consolidated across a smaller number of workers.

Automation threatens 69% of the jobs in India and 77% in China, according to World Bank research.

Job displacement would be the eventual outcome; however, there are several other situations and dimensions that need to be factored in. Effective automation with the help of AI should create new roles and opportunities not experienced before. Those who currently possess traditional programming skills will have to rapidly acquire new capabilities in machine learning and develop an understanding of RPA and its integration with multiple systems. Unlike traditional IT applications, the planning and implementation of these automation projects can be done in small increments over shorter spans of time, so software developers will have to reorient themselves.

For those entering the workforce for the first time, there will be demand for talent with traditional programming skills along with skills for developing or customising RPA frameworks. For those joining business process outsourcing functions, it will be important to develop capability in data interpretation and analysis, as more and more entry-level recruitment will be for such skills and not just for communication or transaction-handling skills.

Blockchain – A blue ocean of a New kind of Financial Industry Skillset

A technology as revolutionary as blockchain will undoubtedly have a major impact on the financial services landscape. Many herald blockchain for its potential to demystify the complex financial services industry while also reducing costs and improving transparency, which could ease the regulatory burden on the industry. But despite its potential role as a precursor to extending financial services to the unbanked, many fear that its effect on the industry may bring more cons than pros.

30–60% of jobs could be rendered redundant by the simple fact that people are able to share data securely with a common record, using blockchain.

Industries including payments, banking, security and more will all feel the impact of the growing adoption of this technology. Jobs potentially in jeopardy include those involving tasks such as processing and reconciling transactions and verifying documentation. Profit centers that leverage financial inefficiencies will be stressed. Companies will lose their value proposition and a loss of sustainable jobs will follow. The introduction of blockchain to the finance industry is similar to the effect of robotics in manufacturing: change in the way we do things, leading to fewer jobs, is inevitable.

Nevertheless, the nature of such jobs is likely to evolve. While blockchain creates an immutable record that is resistant to tampering, fraud may still occur at any stage in the process, but it will be captured in the record and therefore easily detected. This is where we can predict new job opportunities: there could be a whole class of professions around encryption and identity protection.
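The tamper-evidence mentioned here rests on hash chaining: each record stores the hash of the one before it, so an after-the-fact edit breaks the link. The Python sketch below shows only that ingredient, using SHA-256 from the standard library; the record format and function names are hypothetical, and a real blockchain additionally relies on distributed consensus so that a tamperer cannot simply recompute every later hash.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, data, prev_hash):
    """SHA-256 over a canonical JSON encoding of the block's contents."""
    payload = json.dumps({"index": index, "data": data, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records):
    """Link each record to the hash of the block before it."""
    chain = []
    prev = GENESIS_PREV
    for index, data in enumerate(records):
        digest = block_hash(index, data, prev)
        chain.append({"index": index, "data": data, "prev": prev, "hash": digest})
        prev = digest
    return chain


def first_tampered_block(chain):
    """Index of the first block whose contents or link no longer match, else None."""
    prev = GENESIS_PREV
    for block in chain:
        recomputed = block_hash(block["index"], block["data"], block["prev"])
        if block["prev"] != prev or recomputed != block["hash"]:
            return block["index"]
        prev = block["hash"]
    return None


ledger = build_chain(["A pays B 10", "B pays C 4", "C pays A 1"])
print(first_tampered_block(ledger))  # None
ledger[1]["data"] = "B pays C 400"   # an after-the-fact edit
print(first_tampered_block(ledger))  # 1
```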

So far, the number of jobs created by the industry appears to exceed the number of available professionals qualified to fill them, but some aren’t satisfied this trend will continue. Still, the study of the potential impact of blockchain tech on jobs has been largely qualitative to date. Aite Group released a report that found the largest employers in the blockchain industry each employ about 100 people.

Ethics and Ethos in Analytics – Why is it Imperative for Enterprises to Keep Winning the Trust from Customers?

Data Sciences and analytics technology can deliver huge benefits to both individuals and organizations, bringing personalized service, detection of fraud and abuse, efficient use of resources and prevention of failures or accidents. So why are questions being raised about the ethics of analytics and its superset, Data Sciences?

Ethical Business Processes in Analytics Industry

At its core, an organization is “just people”, and so are its customers and stakeholders. It will be individuals who choose what the organization does or does not do, and individuals who will judge its appropriateness. As individuals, our perspective is formed by our experience and the opinions of those we respect. Not surprisingly, different people will have different opinions on what constitutes appropriate use of Data Sciences and analytics technology, so who decides which is “right”? Customers and stakeholders may hold opinions about what is ethical that differ from the organization’s own.

This suggests that organizations should be thoughtful in their use of analytics, consulting widely and forming policies that record the decisions and conclusions they have come to. They should consider the wider implications of their activities, including:

Context – For what purpose was the data originally surrendered? For what purpose is the data now being used? How far removed from the original context is its new use? Is this appropriate?

Consent & Choice – What are the choices given to an affected party? Do they know they are making a choice? Do they really understand what they are agreeing to? Do they really have an opportunity to decline? What alternatives are offered?

Reasonable – Is the depth and breadth of the data used and the relationships derived reasonable for the application it is used for?

Substantiated – Are the sources of data used appropriate, authoritative, complete and timely for the application?

Owned – Who owns the resulting insight? What are their responsibilities towards it in terms of its protection and the obligation to act?

Fair – How equitable are the results of the application to all parties? Is everyone properly compensated?

Considered – What are the consequences of the data collection and analysis?

Access – What access to data is given to the data subject?

Accountable – How are mistakes and unintended consequences detected and repaired? Can the interested parties check the results that affect them?

Together these facets are called the ethical awareness framework. This framework was developed by the UK and Ireland Technical Consultancy Group (TCG) to help people develop ethical policies for their use of analytics and Data Sciences. Examples of good and bad practice are emerging in the industry, and in time they will guide regulation and legislation. The choices we make as practitioners will ultimately determine the level of legislation imposed on the technology and our subsequent freedom to pioneer in this exciting, emerging technical area.

Designing Digital Business for Transparency and Trust

With the explosion of digital technologies, companies are sweeping up vast quantities of data about consumers’ activities, both online and off. Feeding this trend are new smart, connected products—from fitness trackers to home systems—that gather and transmit detailed information.

Though some companies are open about their data practices, most prefer to keep consumers in the dark, choose control over sharing, and ask for forgiveness rather than permission. It’s also not unusual for companies to quietly collect personal data they have no immediate use for, reasoning that it might be valuable someday.

In a future in which customer data will be a growing source of competitive advantage, gaining consumers’ confidence will be key. Companies that are transparent about the information they gather, give customers control of their personal data, and offer fair value in return for it will be trusted and will earn ongoing and even expanded access. Those that conceal how they use personal data and fail to provide value for it stand to lose customers’ goodwill—and their business.

At the same time, consumers appreciate that data sharing can lead to products and services that make their lives easier and more entertaining, educate them, and save them money. Neither companies nor their customers want to turn back the clock on these technologies—and indeed the development and adoption of products that leverage personal data continue to soar. The consultancy Gartner estimates that nearly 5 billion connected “things” will be in use in 2015—up 30% from 2014—and that the number will quintuple by 2020.

Resolving this tension will require companies and policy makers to move the data privacy discussion beyond advertising use and the simplistic notion that aggressive data collection is bad. We believe the answer is more nuanced guidance: specifically, guidelines that align the interests of companies and their customers and ensure that both parties benefit from personal data collection.

Understanding the “Privacy Sensitiveness” of Customer Data

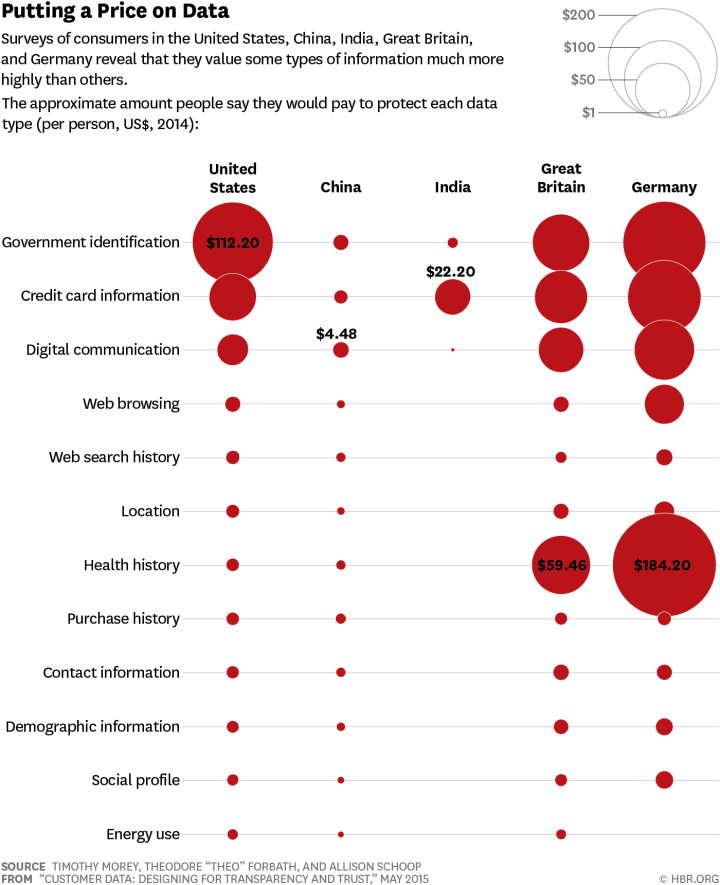

Keeping track of the “privacy sensitiveness” of customer data is also crucial, as data and its privacy are not perfectly black and white. Some forms of data matter more to customers to protect and keep private. To see how much consumers valued their data, a conjoint analysis was performed to determine what amount survey participants would be willing to pay to protect different types of information. Though the value assigned varied widely among individuals, it was possible to determine, in effect, a median value by country for each data type.
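The final aggregation step described here, collapsing widely varying individual valuations into one median per country and data type, can be sketched in a few lines of Python. This assumes the per-respondent values have already been estimated from the conjoint survey (that modelling step is not shown), and the numbers below are purely illustrative, not the survey's results.

```python
from collections import defaultdict
from statistics import median


def median_value_by_country(responses):
    """Median value per (country, data type).

    `responses` holds (country, data_type, value) tuples, where value is a
    respondent's estimated willingness to pay to protect that data type.
    Estimating those per-respondent values from the conjoint survey is a
    separate modelling step not shown here.
    """
    groups = defaultdict(list)
    for country, data_type, value in responses:
        groups[(country, data_type)].append(value)
    return {key: median(values) for key, values in groups.items()}


# Purely illustrative numbers, not survey results.
responses = [
    ("DE", "health", 10.0), ("DE", "health", 30.0), ("DE", "health", 20.0),
    ("IN", "health", 2.0), ("IN", "health", 8.0),
    ("DE", "location", 1.0),
]
medians = median_value_by_country(responses)
print(medians[("DE", "health")])  # 20.0
print(medians[("IN", "health")])  # 5.0
```

The median is a natural choice when individual valuations vary widely, because a few respondents with extreme values barely move it.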

The responses revealed significant differences from country to country and from one type of data to another. Germans, for instance, place the most value on their personal data, and Chinese and Indians the least, with British and American respondents falling in the middle. Government identification, health, and credit card information tended to be the most highly valued across countries, and location and demographic information among the least.

This spectrum doesn’t represent a “maturity model,” in which attitudes in a country predictably shift in a given direction over time (say, from less privacy conscious to more). Rather, our findings reflect fundamental dissimilarities among cultures. The cultures of India and China, for example, are considered more hierarchical and collectivist, while Germany, the United States, and the United Kingdom are more individualistic, which may account for their citizens’ stronger feelings about personal information.

Adopting Data Aggregation Paradigm for Protecting Privacy

If companies want to protect their users and data, they need to be sure to collect only what’s truly necessary. An abundance of data doesn’t necessarily mean an abundance of usable data. Keeping data collection concise and deliberate is key: only data relevant to a defined purpose should be collected and retained, and it should be handled with care in order to protect privacy.
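One concrete way to keep collection deliberate is a purpose-based allow-list: each declared use of data lists the fields it genuinely needs, and everything else is dropped before storage. The purposes and field names in this Python sketch are hypothetical examples, not a recommended schema.

```python
# Hypothetical purposes and field names, for illustration only.
ALLOWED_FIELDS = {
    "order_fulfilment": {"customer_id", "postcode", "basket_total"},
    "fraud_screening": {"customer_id", "basket_total", "payment_country"},
}


def minimise(record, purpose):
    """Keep only the fields the stated purpose needs; refuse unknown purposes."""
    if purpose not in ALLOWED_FIELDS:
        raise ValueError(f"no data-collection purpose registered for {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


raw = {"customer_id": 42, "postcode": "SW1A 1AA", "basket_total": 59.9,
       "date_of_birth": "1990-01-01", "payment_country": "GB"}
print(minimise(raw, "order_fulfilment"))
# {'customer_id': 42, 'postcode': 'SW1A 1AA', 'basket_total': 59.9}
```

Rejecting unregistered purposes, rather than passing data through, forces each new use of personal data to be declared explicitly.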

It’s also important to keep data aggregated in order to protect privacy and instill transparency. Algorithms are currently being used for everything from machine thinking and autonomous cars to data science and predictive analytics. The algorithms used on collected data allow companies to see very specific patterns in consumer behavior, all while keeping individual identities safe.

One way companies can harness this power while heeding privacy concerns is to aggregate their data: if the data shows 50 people following a particular shopping pattern, stop there and act on that group-level data rather than mining further and potentially exposing individual behavior.
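The “stop at the group level” rule above can be sketched as a minimum-group-size filter: count customers per shopping pattern and report only patterns shared by at least 50 people, the figure used in the example. The function name and pattern labels are hypothetical. This is a simple small-cell suppression rule in the spirit of k-anonymity, not a complete privacy guarantee.

```python
from collections import Counter

MIN_GROUP_SIZE = 50  # threshold taken from the example in the text


def aggregate_patterns(purchases, min_group_size=MIN_GROUP_SIZE):
    """Count customers per shopping pattern and keep only large groups.

    `purchases` maps a customer ID to a hashable pattern label (here a tuple
    of product categories; a hypothetical representation). Customer IDs never
    appear in the output, and patterns shared by fewer than `min_group_size`
    customers are suppressed entirely.
    """
    counts = Counter(purchases.values())
    return {pattern: n for pattern, n in counts.items() if n >= min_group_size}


# Hypothetical data: 60 customers share one pattern, 3 share a rare one.
purchases = {f"cust{i}": ("coffee", "pastry") for i in range(60)}
purchases.update({f"rare{i}": ("wine", "cigars") for i in range(3)})
print(aggregate_patterns(purchases))  # {('coffee', 'pastry'): 60}
```

A threshold alone does not prevent every leak. Comparing two overlapping aggregates can still isolate individuals, which is why stronger techniques such as differential privacy exist.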

Things are getting very interesting. Google, Facebook, Amazon, and Microsoft collect the most private information and also bear the most responsibility. Because they understand data so well, companies like Google typically have the strongest controls in place for analyzing and protecting the data they collect.

Finally, Analyze the Analysts

Analytics will play an increasingly significant role in today’s integrated, global industries, where the individual decisions of analytics professionals may influence decision making at the highest levels in ways unimagined years ago. A wrong or misjudged model, analysis, or statistic poses a substantial risk that can jeopardize the proper functioning of an organization.

Instruction, rules, and supervision are essential, but they alone cannot prevent lapses. Given all this, it is imperative that ethics be deeply ingrained in today’s analytics curriculum. I believe that some of the tenets of this code of ethics and standards in analytics and data science should be:

- Ethical benchmarks that apply regardless of job title, cultural differences, or local laws

- Placing the integrity of the analytics profession above one’s own interests

- Maintaining governance and standards mechanisms that data scientists adhere to

- Maintaining and developing professional competence

- Top managers creating a strong culture of analytics ethics at their firms, which must filter throughout their entire analytics organization


AIQRATIONS

Can AI and Eternal Humanity Both Coexist?


At the turn of the century, few, if any, could likely have anticipated the many ways artificial intelligence would later affect our lives.

Take Emotional Robot with Intelligent Network, or ERWIN, for example. He’s designed to mimic human emotions like sadness and happiness in order to help researchers understand how empathy affects human-robot connections. When ERWIN works with Keepon, a robot that looks eerily similar to a real person, scientists gather data on how emotional responses and body language can foster meaningful relationships in an inevitably droid-filled society. Increasingly, robots are integrating into our lives as laborers, therapeutic and medical tools, assistants, and more.

While some predict mass unemployment or all-out war between humans and artificial intelligence, others foresee a less bleak future.

The Machine-Man Coexistence

Professor Manuela Veloso, head of the machine learning department at Carnegie Mellon University, is already testing out the idea of human-robot coexistence on the CMU campus, building roving, Segway-shaped robots called “cobots” that autonomously escort guests from building to building and ask for human help when they fall short. It’s a new way to think about artificial intelligence, and one that could have profound consequences in the next five years.

Humans will coexist with artificial intelligence systems that will, hopefully, be of service to humanity. These AI systems will include software systems that handle the digital world; systems that move around in physical space, like drones, robots, and autonomous cars; and systems that process the physical space, like the Internet of Things.

You will have more intelligent systems in the physical world, too, not just on your cell phone or computer but physically present around us, processing and sensing information about the physical world and helping us make decisions that require knowing a lot about its features. As time goes by, we’ll also see these AI systems having an impact on broader problems in society: managing traffic in a big city, for instance; making complex predictions about the climate; supporting humans in the big decisions they have to make.

Digital – The Ultimate Catalyst to Accelerate AI

A lot of [AI] research in the early days was actually about acquiring [that] knowledge. We would have to ask humans. We would have to go to books and manually enter that information into the computer.

In the last few years, more and more of this information has become digital. It seems that the world reveals itself on the internet. So AI systems are now about the data that’s available and the ability to process that data and make sense of it, and we’re still figuring out the best ways to do that. On the other hand, we are very optimistic because we know that the data is there.

The question now becomes, how do we learn from it? How do you use it? How do you represent it? How do you study the distributions — the statistics of the data? How do you put all these pieces together? That’s how you get deep learning and deep reinforcement learning and systems that do automatic translation and robots that play soccer. All these things are possible because we can process all this data so much more effectively and we don’t have to take the enormous step of acquiring that knowledge and representing it. It’s there.

Rules of Coexistence

As of late, discussions have run rampant about the impact of intelligent systems on the nature of work, jobs and the economy. Whether it is self-driving cars, automated warehouses, intelligent advisory systems, or interactive systems supported by deep learning, these technologies are rumored to first take our jobs and eventually run the world.

There are many points of view on this issue, all aimed at defining our role in a world of highly intelligent machines, but also aggressively denying the truth of the world to come. Below are the main arguments about how we’ll coexist with machines in the future:

Machines Take Our Jobs, New Jobs Are Created

Some arguments are driven by the historical observation that every new piece of technology has both destroyed and created jobs. The cotton gin automated the cleaning of cotton. This meant that people no longer had to do that work, but the machine also enabled a massive growth in cotton production, which shifted the work to cotton picking. For nearly every piece of technology, from the steam engine to the word processor, the argument is that as some jobs were destroyed, others were created.

Machines Only Take Some Of Our Jobs

A variant of the first argument is that even if new jobs are not created, people will shift their focus to those aspects of work that intelligent systems are not equipped to handle. This includes areas requiring the creativity, insight and personal communication that are hallmarks of human abilities, and ones that machines simply do not possess. The driving logic is that there are certain human skills that a machine will never be able to master.

A similar, but more nuanced argument portrays a vision of man-machine partnerships in which the analytical power of a machine augments the more intuitive and emotional skills of the human. Or, depending on how much you value one over the other, human intuition will augment a machine’s cold calculations.

Machines Take Our Jobs, We Design New Machines

Finally, there is the view that as intelligent machines do more and more of the work, we will need more and more people to develop the next generation of those machines. Supported by historical parallels (e.g., cars created the need for mechanics and automobile designers), the argument is that we will always need someone working on the next generation of technology. This is a particularly presumptuous position, as it is essentially technologists arguing that while machines will do many things, they will never be able to do what technologists do.

But Could Coexistence Exist Eternally?

The arguments above are all reasonable, and each one has its merits. But they are all based on the same assumption: machines will never be able to do everything that people can do, because there will always be gaps in a machine’s ability to reason or to be creative or intuitive. Machines will never have empathy or emotion, nor the ability to make decisions or be consciously aware of themselves in a way that could drive introspection.

These assumptions have existed since the earliest days of A.I. They tend to go unquestioned simply because we prefer to live in a world in which machines cannot be our equals, and we maintain control over those aspects of cognition that, to this point at least, make us unique.

But the reality is that, from consciousness to intuition to emotion, there is no reason to believe that any of these assumptions will hold. The only alternative to the belief that human thought can be modeled on a machine is to believe that our minds are the product of “magic.” Either we are part of the world of causation or we are not. If we are, A.I. is possible.