Managing Bias in AI: Strategic Risk Management Strategy for Banks


AI is set to transform the banking industry, using vast amounts of data to build models that improve decision making, tailor services, and improve risk management. According to the EIU, this could generate value of more than $250 billion in the banking industry. But there is a downside, since ML models amplify some elements of model risk. And although many banks, particularly those operating in jurisdictions with stringent regulatory requirements, have validation frameworks and practices in place to assess and mitigate the risks associated with traditional models, these are often insufficient to deal with the risks associated with machine-learning models. The added risk brought on by the complexity of algorithmic models can be mitigated by making well-targeted modifications to existing validation frameworks.

Conscious of the problem, many banks are proceeding cautiously, restricting the use of ML models to low-risk applications, such as digital marketing. Their caution is understandable given the potential financial, reputational, and regulatory risks. Banks could, for example, find themselves in violation of anti-discrimination laws and incur significant fines, a concern that pushed one bank to ban its HR department from using a machine-learning resume screener. A better approach, however, and ultimately the only sustainable one if banks are to reap the full benefits of machine-learning models, is to enhance model-risk management.

Regulators have not issued specific instructions on how to do this. In the United States, they have stipulated that banks are responsible for ensuring that risks associated with machine-learning models are appropriately managed, while stating that existing regulatory guidelines, such as the Federal Reserve’s “Guidance on Model Risk Management” (SR11-7), are broad enough to serve as a guide. Enhancing model-risk management to address the risks of machine-learning models will require policy decisions on what to include in a model inventory, as well as determining risk appetite, risk tiering, roles and responsibilities, and model life-cycle controls, not to mention the associated model-validation practices. The good news is that many banks will not need entirely new model-validation frameworks. Existing ones can be fitted for purpose with some well-targeted enhancements.

New Risk-Mitigation Exercises for ML Models

There is no shortage of news headlines revealing the unintended consequences of new machine-learning models. Algorithms that created a negative feedback loop were blamed for the “flash crash” of the British pound by 6 percent in 2016, for example, and it was reported that a self-driving car tragically failed to properly identify a pedestrian walking her bicycle across the street. The cause of the risks that materialized in these machine-learning models is the same as the cause of the amplified risks that exist in all machine-learning models, whatever the industry and application: increased model complexity. Machine-learning models typically act on vastly larger data sets, including unstructured data such as natural language, images, and speech. The algorithms are typically far more complex than their statistical counterparts and often require design decisions to be made before the training process begins. And machine-learning models are built using new software packages and computing infrastructure that require more specialized skills. The response to such complexity does not have to be overly complex, however. If properly understood, the risks associated with machine-learning models can be managed within banks’ existing model-validation frameworks.

Here are the strategic approaches enterprises can take to ensure that the specific risks associated with machine learning are addressed:

Demystification of “Black Boxes” : Machine-learning models have a reputation of being “black boxes.” Depending on the model’s architecture, the results it generates can be hard to understand or explain. One bank worked for months on a machine-learning product-recommendation engine designed to help relationship managers cross-sell. But because the managers could not explain the rationale behind the model’s recommendations, they disregarded them. They did not trust the model, which in this situation meant wasted effort and perhaps wasted opportunity. In other situations, acting upon (rather than ignoring) a model’s less-than-transparent recommendations could have serious adverse consequences.

The degree of demystification required is a policy decision for banks to make based on their risk appetite. They may choose to hold all machine-learning models to the same high standard of interpretability or to differentiate according to the model’s risk. In the United States, models that determine whether to grant credit to applicants are covered by fair-lending laws. The models therefore must be able to produce clear reason codes for a refusal. On the other hand, banks might well decide that a machine-learning model’s recommendation to place a product advertisement on the mobile app of a given customer poses so little risk to the bank that understanding the model’s reasons for doing so is not important. Validators also need to ensure that models comply with the chosen policy. Fortunately, despite the black-box reputation of machine-learning models, significant progress has been made in recent years to help ensure their results are interpretable. A range of approaches can be used, based on the model class:

- Linear and monotonic models (for example, linear-regression models): linear coefficients help reveal the dependence of a result on the inputs.

- Nonlinear and monotonic models (for example, gradient-boosting models with monotonic constraints): restricting inputs so they have either a rising or falling relationship globally with the dependent variable simplifies the attribution of inputs to a prediction.

- Nonlinear and nonmonotonic models (for example, unconstrained deep-learning models): methodologies such as local interpretable model-agnostic explanations (LIME) or Shapley values help ensure local interpretability.
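For the linear case, the link between coefficients and reason codes can be made concrete. The sketch below is illustrative only: the feature names, coefficients, and baseline applicant are hypothetical, and ranking by "coefficient times deviation from a baseline" is one simple attribution convention, not a prescribed regulatory method.

```python
from typing import Dict, List, Tuple


def linear_contributions(
    coefficients: Dict[str, float],
    applicant: Dict[str, float],
    baseline: Dict[str, float],
) -> List[Tuple[str, float]]:
    """Return each feature's contribution to the score relative to a baseline applicant."""
    contributions = [
        (name, coef * (applicant[name] - baseline[name]))
        for name, coef in coefficients.items()
    ]
    # Most negative contributions first: these are the candidate reason codes.
    return sorted(contributions, key=lambda item: item[1])


# Hypothetical linear credit score (a higher score means lower risk).
coefficients = {"debt_to_income": -4.0, "years_at_employer": 0.5, "missed_payments": -1.5}
baseline = {"debt_to_income": 0.30, "years_at_employer": 5.0, "missed_payments": 0.0}
applicant = {"debt_to_income": 0.55, "years_at_employer": 1.0, "missed_payments": 2.0}

ranked = linear_contributions(coefficients, applicant, baseline)
# Report the two features that pulled the score down the most.
reason_codes = [name for name, value in ranked if value < 0][:2]
print(reason_codes)
```

Because the model is linear, these contributions add up exactly to the difference between the applicant's score and the baseline score, which is what makes the explanation straightforward to defend.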

Bias : A model can be influenced by four main types of bias: sample bias, measurement bias, algorithmic bias, and bias against groups or classes of people. The latter two types, algorithmic bias and bias against people, can be amplified in machine-learning models. For example, the random-forest algorithm tends to favor inputs with more distinct values, a bias that elevates the risk of poor decisions. One bank developed a random-forest model to assess potential money-laundering activity and found that the model favored fields with a large number of categorical values, such as occupation, when fields with fewer categories, such as country, were better able to predict the risk of money laundering.

To address algorithmic bias, model-validation processes should be updated to ensure appropriate algorithms are selected in any given context. In some cases, such as random-forest feature selection, there are technical solutions. Another approach is to develop “challenger” models, using alternative algorithms to benchmark performance.

To address bias against groups or classes of people, banks must first decide what constitutes fairness. Four definitions are commonly used, though which to choose may depend on the model’s use:

- Demographic blindness: decisions are made using a limited set of features that are highly uncorrelated with protected classes, that is, groups of people protected by laws or policies.

- Demographic parity: outcomes are proportionally equal for all protected classes.

- Equal opportunity: true-positive rates are equal for each protected class.

- Equal odds: true-positive and false-positive rates are equal for each protected class.

Validators then need to ascertain whether developers have taken the necessary steps to ensure fairness. Models can be tested for fairness and, if necessary, corrected at each stage of the model-development process, from the design phase through to performance monitoring.
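Three of these definitions (demographic parity, equal opportunity, and equal odds) reduce to comparing simple rates across groups, so a validator can check them numerically. The following is a minimal sketch on made-up data. The group labels, function names, and the idea of reporting the largest gap between groups are assumptions for illustration, and what size of gap is acceptable remains a policy decision.

```python
from collections import defaultdict
from typing import Dict, List


def group_rates(y_true: List[int], y_pred: List[int], groups: List[str]) -> Dict[str, Dict[str, float]]:
    """Compute selection rate, true-positive rate and false-positive rate per group."""
    counts = defaultdict(lambda: {"n": 0, "pred_pos": 0, "pos": 0, "tp": 0, "neg": 0, "fp": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["pred_pos"] += pred
        if truth == 1:
            c["pos"] += 1
            c["tp"] += pred
        else:
            c["neg"] += 1
            c["fp"] += pred
    return {
        g: {
            "selection_rate": c["pred_pos"] / c["n"],                      # demographic parity
            "tpr": c["tp"] / c["pos"] if c["pos"] else float("nan"),       # equal opportunity
            "fpr": c["fp"] / c["neg"] if c["neg"] else float("nan"),       # with tpr: equal odds
        }
        for g, c in counts.items()
    }


def max_gap(rates: Dict[str, Dict[str, float]], metric: str) -> float:
    """Largest difference in a metric between any two groups."""
    values = [r[metric] for r in rates.values()]
    return max(values) - min(values)


# Made-up outcomes (1 = approved / actually creditworthy) for two hypothetical groups.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = group_rates(y_true, y_pred, groups)
for metric in ("selection_rate", "tpr", "fpr"):
    print(metric, max_gap(rates, metric))
```

Demographic blindness is not covered here, since it is a constraint on which features enter the model rather than a property of its outputs.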

Feature engineering : Feature engineering is often much more complex in the development of machine-learning models than in traditional models. There are three reasons why. First, machine-learning models can incorporate a significantly larger number of inputs. Second, unstructured data sources such as natural language require feature engineering as a preprocessing step before the training process can begin. Third, increasing numbers of commercial machine-learning packages now offer so-called AutoML, which generates large numbers of complex features to test many transformations of the data. Models produced using these features run the risk of being unnecessarily complex, contributing to overfitting. For example, one institution built a model using an AutoML platform and found that specific sequences of letters in a product application were predictive of fraud. This was a completely spurious result caused by the algorithm’s maximizing the model’s out-of-sample performance.

In feature engineering, banks have to make a policy decision to mitigate risk. They have to determine the level of support required to establish the conceptual soundness of each feature. The policy may vary according to the model’s application. For example, a highly regulated credit-decision model might require that every individual feature in the model be assessed. For lower-risk models, banks might choose to review the feature-engineering process only: for example, the processes for data transformation and feature exclusion. Validators should then ensure that features and/or the feature-engineering process are consistent with the chosen policy. If each feature is to be tested, three considerations are generally needed: the mathematical transformation of model inputs, the decision criteria for feature selection, and the business rationale. For instance, a bank might decide that there is a good business case for using debt-to-income ratios as a feature in a credit model but not frequency of ATM usage, as this might penalize customers for using an advertised service.

Hyperparameters : Many of the parameters of machine-learning models, such as the depth of trees in a random-forest model or the number of layers in a deep neural network, must be defined before the training process can begin. In other words, their values are not derived from the available data. Rules of thumb, parameters used to solve other problems, or even trial and error are common substitutes. Decisions regarding these kinds of parameters, known as hyperparameters, are often more complex than analogous decisions in statistical modeling. Not surprisingly, a model’s performance and its stability can be sensitive to the hyperparameters selected. For example, banks are increasingly using binary classifiers such as support-vector machines in combination with natural-language processing to help identify potential conduct issues in complaints. The performance of these models and the ability to generalize can be very sensitive to the selected kernel function. Validators should ensure that hyperparameters are chosen as soundly as possible. For some quantitative inputs, as opposed to qualitative inputs, a search algorithm can be used to map the parameter space and identify optimal ranges. In other cases, the best approach to selecting hyperparameters is to combine expert judgment and, where possible, the latest industry practices.

Production readiness : Traditional models are often coded as rules in production systems. Machine-learning models, however, are algorithmic, and therefore require more computation. This requirement is commonly overlooked in the model-development process. Developers build complex predictive models only to discover that the bank’s production systems cannot support them. One US bank spent considerable resources building a deep learning–based model to predict transaction fraud, only to discover it did not meet required latency standards. Validators already assess a range of model risks associated with implementation. However, for machine learning, they will need to expand the scope of this assessment. They will need to estimate the volume of data that will flow through the model and assess the production-system architecture (for example, graphics-processing units for deep learning) and the runtime required.
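A latency check of this kind can be run well before deployment. The sketch below times a scoring function on sample payloads and compares the 95th-percentile latency with a budget. The toy scorer, the payloads, and the choice of the 95th percentile are assumptions for illustration; a real test would call the candidate model on production-like hardware with production-like volumes.

```python
import statistics
import time
from typing import Callable, Dict, List, Sequence


def measure_latency_ms(score_fn: Callable, payloads: Sequence, warmup: int = 5) -> Dict[str, float]:
    """Time one scoring call per payload (payloads must be non-empty); report milliseconds."""
    for payload in payloads[:warmup]:
        score_fn(payload)  # warm-up calls are not timed
    timings: List[float] = []
    for payload in payloads:
        start = time.perf_counter()
        score_fn(payload)
        timings.append((time.perf_counter() - start) * 1000.0)
    timings.sort()
    rank = (95 * len(timings) + 99) // 100  # nearest-rank 95th percentile
    return {
        "median_ms": statistics.median(timings),
        "p95_ms": timings[rank - 1],
        "max_ms": timings[-1],
    }


def meets_budget(report: Dict[str, float], p95_budget_ms: float) -> bool:
    """True when the 95th-percentile latency fits inside the budget."""
    return report["p95_ms"] <= p95_budget_ms


# Hypothetical stand-in for a fraud model's scoring call.
WEIGHTS = [0.2, -0.5, 1.3]


def toy_scorer(features: List[float]) -> float:
    return sum(w * x for w, x in zip(WEIGHTS, features))


payloads = [[float(i), 1.0, 2.0] for i in range(200)]
report = measure_latency_ms(toy_scorer, payloads)
print(report, meets_budget(report, p95_budget_ms=50.0))
```

Tail latency is used here in preference to the average because a transaction-fraud decision that is usually fast but occasionally slow can still breach a service standard.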

Dynamic model calibration : Some classes of machine-learning models modify their parameters dynamically to reflect emerging patterns in the data. This replaces the traditional approach of periodic manual review and model refresh. Examples include reinforcement-learning algorithms or Bayesian methods. The risk is that without sufficient controls, an overemphasis on short-term patterns in the data could harm the model’s performance over time. Banks therefore need to decide when to allow dynamic recalibration. They might conclude that with the right controls in place, it is suitable for some applications, such as algorithmic trading. For others, such as credit decisions, they might require clear proof that dynamic recalibration outperforms static models. With the policy set, validators can evaluate whether dynamic recalibration is appropriate given the intended use of the model, develop a monitoring plan, and ensure that appropriate controls are in place to identify and mitigate risks that might emerge. These might include thresholds that catch material shifts in a model’s health, such as out-of-sample performance measures, and guardrails such as exposure limits or other, predefined values that trigger a manual review.
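The thresholds and guardrails described above can be expressed as a small set of explicit checks that run after each recalibration. This is a minimal sketch: the use of AUC as the out-of-sample measure, the field names, and the numeric limits are all hypothetical and would be set by the bank's own policy.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Guardrails:
    min_auc: float       # floor on out-of-sample performance
    max_auc_drop: float  # largest tolerated drop versus the validated baseline
    max_exposure: float  # exposure limit for decisions driven by the model


def review_triggers(
    baseline_auc: float, current_auc: float, current_exposure: float, limits: Guardrails
) -> List[str]:
    """Return the reasons, if any, why a recalibrated model needs manual review."""
    triggers = []
    if current_auc < limits.min_auc:
        triggers.append("out-of-sample AUC below floor")
    if baseline_auc - current_auc > limits.max_auc_drop:
        triggers.append("material drop in AUC since validation")
    if current_exposure > limits.max_exposure:
        triggers.append("exposure limit exceeded")
    return triggers


limits = Guardrails(min_auc=0.70, max_auc_drop=0.05, max_exposure=1_000_000.0)
triggers = review_triggers(
    baseline_auc=0.80, current_auc=0.72, current_exposure=1_200_000.0, limits=limits
)
print(triggers)
```

An empty list means the recalibrated model can stay in use; any entry would route the model to manual review under the monitoring plan.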

Banks will need to proceed gradually. The first step is to make sure model inventories include all machine learning–based models in use. One bank’s model risk-management function was certain the organization was not yet using machine-learning models, until it discovered that its recently established innovation function had been busy developing machine-learning models for fraud and cyber security.

From here, validation policies and practices can be modified to address machine-learning-model risks, though initially for a restricted number of model classes. This helps build experience while testing and refining the new policies and practices. Considerable time will be needed to monitor a model’s performance and finely tune the new practices. But over time banks will be able to apply them to the full range of approved machine-learning models, helping companies mitigate risk and gain the confidence to start harnessing the full power of machine learning.

AIQRATE is a bespoke global AI advisory and consulting firm. A first in its genre, AIQRATE provides strategic AI advisory services and consulting offerings across multiple business segments to enable clients on their AI-powered transformation and innovation journey and accentuate their decision making and business performance.

AIQRATE works closely with Boards, CXOs and Senior leaders advising them on navigating their Analytics to AI journey with the art of possible or making them jump start to AI progression with AI@scale approach followed by consulting them on embedding AI as core to business strategy within business functions and augmenting the decision-making process with AI. We have proven bespoke AI advisory services to enable CXO’s and Senior Leaders to curate & design building blocks of AI strategy, embed AI@scale interventions and create AI powered organizations. AIQRATE’s path breaking 50+ AI consulting frameworks, assessments, primers, toolkits and playbooks enable Indian & global enterprises, GCCs, Startups, VC/PE firms, and Academic Institutions enhance business performance and accelerate decision making.

Visit www.aiqrate.ai to experience our AI advisory services & consulting offerings


Emergence of AI Powered Enterprise: Strategic considerations for Leaders


The excitement around artificial intelligence is palpable. It seems that not a day goes by without one of the giants in the industry coming out with a breakthrough application of this technology, or a new nuance is added to the overall body of knowledge. Horizontal and industry-specific use cases of AI abound and there is always something exciting around the corner every single day.

However, with the keen interest from global leaders of multinational corporations, the conversation is shifting towards having a strategic agenda for AI in the enterprise. Business heads are less interested in topical experiments and minuscule productivity gains made in the short term. They are more keen to understand the impact of AI in their areas of work from a long-term standpoint. Perhaps the most important question that they want to see answered is – what will my new AI-enabled enterprise look like? The question is as strategic as it is pertinent. For business leaders, the most important issues are – improving shareholder returns and ensuring a productive workforce – as part of running a sustainable, future-ready business. Artificial intelligence may be the breakout technology of our time, but business leaders are more occupied with trying to understand just how this technology can usher in a new era of their business, how it is expected to upend existing business value chains, unlock new revenue streams, and deliver improved efficiencies in cost outlays. In this article, let us try to answer these questions.

AI is Disrupting Existing Value Chains

Ever since Michael Porter first expounded on the concept in his best-selling book, Competitive Advantage: Creating and Sustaining Superior Performance, the value chain has gained great currency in the minds of business leaders globally. The idea behind the value chain was to map out the interlinkages between the primary activities that work together to conceptualize and bring a product or service to market (R&D, manufacturing, supply chain, marketing, etc.), as well as the role played by support activities performed by other internal functions (finance, HR, IT, etc.). Strategy leaders globally leverage the concept of value chains to improve business planning, identify new possibilities for improving business efficiency, and exploit potential areas for new growth.

Now with AI entering the fray, we might see new vistas in the existing value chains of multinational corporations. For instance:

- Manufacturing is becoming heavily augmented by artificial intelligence and robotics. We are seeing these technologies gain a stronger foothold across processes requiring increasing sophistication. Business leaders now need to seriously consider workforce planning for a labor force that consists of both human and artificial workers at their manufacturing units. Due attention should also be paid to ensuring that both coexist in a symbiotic and complementary manner.

- Logistics and Delivery are two other areas where we are seeing steady growth in the use of artificial intelligence. Demand planning and fulfilment through AI has already reached a high level of sophistication at most retailers. Now Amazon – which handles some of the largest and most complex logistics networks in the world – is in advanced stages of bringing in unmanned aerial vehicles (drones) for deliveries through its Amazon Prime Air program. Business leaders expect outcomes to range from increased customer satisfaction (through faster deliveries) to reduced costs for the delivery process.

- Marketing and Sales are constantly at the forefront of some of the most exciting inventions in AI. One of the most recent and evolved applications of AI is Reactful. A tool developed for eCommerce properties, Reactful helps drive better customer conversions by analyzing the clickstream and digital footprints of people who are on web properties and persuading them to make a purchase. Business leaders need to explore new ideas such as this that can help drive meaningful engagement and top-line growth through these new AI-powered tools.

AI is Enabling New Revenue Streams

The second way business leaders are thinking strategically around AI is for its potential to unlock new sources of revenue. Earlier, functions such as internal IT were seen as cost centers. In today’s world, due to cost and competitive pressure, areas of the business that were traditionally considered cost centers are required to reinvent themselves as revenue and profit centers. The expectation from AI is no different. There is a need to justify the investments made in this technology – and find a way for it to unlock new streams of revenue in traditional organizations. Here are two key ways in which business leaders can monetize AI:

- Indirect Monetization is one of the forms of leveraging AI to unlock new revenue streams. It involves embedding AI into traditional business processes with a focus on driving increased revenue. We hear of multiple companies from Amazon to Google that use AI-powered recommendation engines to drive incremental revenue through intelligent recommendations and smarter bundling. The action item for business leaders is to engage stakeholders across the enterprise to identify areas where AI can be deeply ingrained within tech properties to drive incremental revenue.

- Direct Monetization involves directly adding AI as a feature to existing offerings. Examples abound in this area – from Salesforce bringing in Einstein into their platform as an AI-centric service to cloud infrastructure providers such as Amazon and Microsoft adding AI capabilities into their cloud offerings. Business leaders should brainstorm about how AI augments their core value proposition and how it can be added into their existing product stack.

AI is Bringing Improved Efficiencies

The third critical intervention for a new AI-enabled enterprise is bringing to the fore a more cost-effective business. Numerous topical and early-stage experiments with AI have brought interesting success in reducing the total cost of doing business. Now is the time to create a strategic roadmap for these efficiency-led interventions and quantitatively measure their impact on the business. Some food for thought for business leaders:

- Supply Chain Optimization is an area that is ripe for AI-led disruption. With increasing varieties of products and categories and new virtual retailers arriving on the scene, there is a need for companies to reduce their outlay on the network that procures and delivers goods to consumers. One example of AI augmenting the supply chain function comes from Evertracker – a Hamburg-based startup. By leveraging IoT sensors and AI, they help their customers identify weaknesses such as delays and possible shortages early, basing their analysis on internal and external data. Business leaders should scout for solutions such as these that rely on data to identify possible tweaks in the supply chain network that can unlock savings for their enterprises.

- Human Resources is another area where AI-centric solutions can be extremely valuable to drive down the turnaround time for talent acquisition. One such solution is developed by Recualizer – which reduces the need for HR staff to scan through each job application individually. With this tool, talent acquisition teams need to first determine the framework conditions for a job on offer, while leaving the creation of assessment tasks to the artificial intelligence system. The system then communicates the evaluation results and recommends the most suitable candidates for further interview rounds. Business leaders should identify such game-changing solutions that can make their recruitment much more streamlined – especially if they receive a high number of applications.

- The Customer Experience arena also throws up very exciting AI use cases. We have now gone well beyond just bots answering frequently asked questions. Today, AI-enabled systems can also provide personalized guidance to customers that can help organizations level-up on their customer experience, while maintaining a lower cost of delivering that experience. Booking.com is a case in point. Their chatbot helps customers identify interesting activities and events that they can avail of at their travel destinations. Business leaders should explore such applications that provide the double advantage of improving customer experience, while maintaining strong bottom-line performance.

The possibilities for the new AI-enabled enterprises are as exciting as they are varied. The ideas shared are by no means exhaustive, but they will hopefully seed interesting ideas for powering improved business performance. Strategy leaders and business heads need to consider how their AI-led businesses can help disrupt their existing value chains for the better, and unlock new ideas for improving bottom-line and top-line performance. This will usher in a new era of the enterprise, enabled by AI.


Personal Data Sharing & Protection: Strategic relevance from India’s context


India’s investments in digital financial infrastructure, known as “India Stack,” have sped up the large-scale digitization of people’s financial lives. As more and more people begin to conduct transactions online, questions have emerged about how to provide millions of customers adequate data protection and privacy while allowing their data to flow throughout the financial system. Data-sharing among financial services providers (FSPs) can enable providers to more efficiently offer a wider range of financial products better tailored to the needs of customers, including low-income customers.

However, it is important to ensure customers understand and consent to how their data are being used. India’s solution to this challenge is account aggregators (AAs). The Reserve Bank of India (RBI) created AAs in 2018 to simplify the consent process for customers. In most open banking regimes, financial information providers (FIPs) and financial information users (FIUs) directly exchange data. This direct model of data exchange, such as between a bank and a credit bureau, offers customers limited control and visibility into what data are being shared and to what end. AAs have been designed to sit between FIPs and FIUs to facilitate data exchange more transparently. Despite their name, AAs are barred from seeing, storing, analyzing, or using customer data. As trusted, impartial intermediaries, they simply manage consent and serve as the pipes through which data flow among FSPs. When a customer gives consent to a provider via the AA, the AA fetches the relevant information from the customer’s financial accounts and sends it via secure channels to the requesting institution. An Electronic Consent Framework guides the implementation of RBI’s policies for consensual data-sharing, including the establishment and operation of AAs. It provides a set of guiding design principles, outlines the technical format of data requests, and specifies the parameters governing the terms of use of requested data. It also specifies how to log consent and data flows.

There are several operational and coordination challenges across these three types of entities: FIPs, FIUs, and AAs. There are also questions around the data-sharing business model of AAs. Since AAs are additional players, they generate costs that must be offset by efficiency gains in the system to mitigate overall cost increases to customers. It remains an open question whether AAs will advance financial inclusion, how they will navigate issues around digital literacy and smartphone access, how the limits of a consent-based model of data protection and privacy play out, what capacity issues will be encountered among regulators and providers, and whether a competitive market of AAs will emerge given that regulations and interoperability arrangements largely define the business.

Account Aggregators (AAs):

Account aggregators (AAs) are one of the new categories of non-banking financial companies (NBFCs) to figure into India Stack, India’s interconnected set of public and nonprofit infrastructure that supports financial services. India Stack has scaled considerably since its creation in 2009, marked by rapid digitization and parallel growth in mobile networks, reliable data connectivity, falling data costs, and continuously increasing smartphone use. Consequently, the creation, storage, use, and analysis of personal data have become increasingly relevant. Following an “open banking” approach, the Reserve Bank of India (RBI) licensed seven AAs in 2018 to address emerging questions around how data can be most effectively leveraged to benefit individuals while ensuring appropriate data protection and privacy, with consent being a key element in this. RBI created AAs to address the challenges posed by the proliferation of data by enabling data-sharing among financial institutions with customer consent. The intent is to provide a method through which customers can consent (or not) to a financial services provider accessing their personal data held by other entities. Providers are interested in these data, in part, because information shared by customers, such as bank statements, will allow providers to better understand customer risk profiles. The hypothesis is that consent-based data-sharing will help poorer customers qualify for a wider range of financial products and receive financial products better tailored to their needs.

Data-Sharing Model: The New Perspective

Paper-based data collection is inconvenient, time-consuming, and costly for customers and providers. Where models for digital sharing exist, they typically involve transferring data through intermediaries that are not always secure or through specialized agencies that offer little protection for customers. India’s consent-based data-sharing model provides a digital framework that enables individuals to give and withdraw consent on how and how much of their personal data are shared via secure and standardized channels. India’s guiding principles for sharing data with user consent, not only in the financial sector, are outlined in the National Data Sharing and Accessibility Policy (2012) and the Policy for Open Application Programming Interfaces for the Government of India. The Information Technology Act (2000) requires any entity that shares sensitive personal data to obtain consent from the user before the information is shared. The forthcoming Personal Data Protection Bill makes it illegal for institutions to share personal data without consent.

India’s Ministry of Electronics and Information Technology (MeitY) has issued an Electronic Consent Framework to define a comprehensive mechanism to implement policies for consensual data-sharing. It provides a set of guiding design principles, outlines the technical format of the data request, and specifies the parameters governing the terms of use of the data requested. It also specifies how to log both consent and data flows. This “consent artifact” was adopted by RBI, SEBI, IRDAI, and PFRDA. Components of the consent artifact structure include:

- Identifier: Specifies entities involved in the transaction: who is requesting the data, who is granting permission, who is providing the data, and who is recording consent.

- Data: Describes the type of data being accessed and the permissions for use of the data. Three types of permissions are available: view (read only), store, and query (request for specific data). The artifact structure also specifies the data that are being shared, date range for which they are being requested, duration of storage by the consumer, and frequency of access.

- Purpose: Describes end use, for example, to compute a loan offer.

- Log: Contains logs of who asked for consent, whether it was granted or not, and data flows.

- Digital signature: Identifies the digital signature and digital ID user certificate used by the provider to verify the digital signature. This allows providers to share information in encrypted form.
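As a rough illustration of how the five components listed above fit together, here is a minimal Python sketch. It is not the MeitY schema: every class, field, and method name (`ConsentArtifact`, `covers`, `storage_days`, and so on) is an assumption made for this example, and the signature is stored as a plain placeholder string rather than being cryptographically verified.

```python
from dataclasses import dataclass, field
from datetime import date, datetime, timezone
from enum import Enum


class Permission(Enum):
    """The three permission types named in the text."""
    VIEW = "view"    # read only
    STORE = "store"
    QUERY = "query"  # request for specific data


@dataclass(frozen=True)
class Identifier:
    """Who is involved in the transaction (hypothetical field names)."""
    data_requester: str
    consent_grantor: str
    data_provider: str
    consent_recorder: str


@dataclass(frozen=True)
class DataSpec:
    """What is shared and on what terms (hypothetical field names)."""
    data_types: tuple
    permission: Permission
    date_from: date          # start of the date range being requested
    date_to: date            # end of the date range being requested
    storage_days: int        # how long the consumer may store the data
    accesses_per_month: int  # frequency of access


@dataclass
class LogEntry:
    timestamp: datetime
    event: str
    actor: str


@dataclass
class ConsentArtifact:
    identifier: Identifier
    data: DataSpec
    purpose: str
    signature: str       # placeholder only; no verification is done here
    certificate_id: str  # placeholder for the digital ID certificate
    granted: bool = False
    log: list = field(default_factory=list)

    def _record(self, event: str, actor: str) -> None:
        self.log.append(LogEntry(datetime.now(timezone.utc), event, actor))

    def grant(self) -> None:
        self.granted = True
        self._record("consent_granted", self.identifier.consent_grantor)

    def withdraw(self) -> None:
        self.granted = False
        self._record("consent_withdrawn", self.identifier.consent_grantor)

    def covers(self, permission: Permission, record_date: date) -> bool:
        """True if consent is active and covers this permission and date."""
        return (
            self.granted
            and permission is self.data.permission
            and self.data.date_from <= record_date <= self.data.date_to
        )
```

In this sketch, a requester would call `covers(...)` before each access, and a withdrawal by the customer immediately makes every later check fail, mirroring the give-and-withdraw consent model described earlier.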

The Approach:

The AA consent-based data-sharing model mediates the flow of data between producers and users of data, ensuring that sharing data is subject to granular customer consent. AAs manage only the consent and data flow for the benefit of the consumer, mitigating the risk of a financial information user (FIU) pressuring consumers to consent to access to their data in exchange for a product or service. However, AAs, as entities that sit in the middle of this ecosystem, come with additional costs that will affect the viability of the business model and the cost of servicing consumers. FIUs will most likely urge consumers to go directly to an AA to receive fast, efficient, and low-cost services. Ultimately, however, AAs must market their services directly to the consumer. While AA services are not an easy sell, rising awareness among Indian consumers that their data are being sold without their consent or knowledge may give rise to an initial wave of adopters. While the AA model is promising, it remains to be seen how and when it will have a direct impact on the financial lives of consumers.

Differences between the Personal Data Protection Bill and the GDPR

There are some major differences between the two.

First, the bill gives India’s central government the power to exempt any government agency from the bill’s requirements. This exemption can be given on grounds related to national security, national sovereignty, and public order.

While the GDPR offers EU member states similar escape clauses, they are tightly regulated by other EU directives. Without these safeguards, India’s bill potentially gives India’s central government the power to access individual data over and above existing Indian laws such as the Information Technology Act of 2000, which deals with cybercrime and e-commerce.

Second, unlike the GDPR, India’s bill allows the government to order firms to share any of the non-personal data they collect with the government. The bill says this is to improve the delivery of government services. But it does not explain how these data will be used, whether they will be shared with other private businesses, or whether any compensation will be paid for their use.

Third, the GDPR does not require businesses to keep EU data within the EU. They can transfer it overseas, so long as they meet conditions such as standard contractual clauses on data protection, codes of conduct, or certification systems that are approved before the transfer.

The Indian bill allows the transfer of some personal data, but sensitive personal data can only be transferred outside India if it meets requirements that are similar to those of the GDPR. What’s more, this data can only be sent outside India to be processed; it cannot be stored outside India. This will create technical issues in delineating between categories of data that have to meet this requirement, and add to businesses’ compliance costs.

AI Strategy: The Epiphany of Digital Transformation

In recent months, lockdowns and working from home (WFH) have given enterprises an epiphany: staying relevant and solvent requires massive shifts in business and strategic models. Digital transformation, long touted as the biggest strategic differentiator and competitive advantage for enterprises, faced inertia in legacy-based enterprises and remained more on business planning slides than in full implementation. However, digital transformation is not about aggregating exponential technologies and ad hoc use cases or stitching together alliances with deep tech startups. The underpinning of digital transformation is AI, and AI strategy has become foundational to accomplishing digital transformation and generating tangible business results. But before we get to the significance of AI strategy in digital transformation, we need to understand the core of digital transformation itself. Because digital transformation will look different for every enterprise, it can be hard to pinpoint a definition that applies to all. In general terms, however, we define digital transformation as the integration of digital technology into core areas of business, resulting in fundamental changes to how businesses operate and how they deliver value to customers.

In specific terms, though, digital transformation can take a very interesting shape depending on the business moment in question. From a customer’s point of view, “Digital transformation closes the gap between what digital customers already expect and what analog businesses actually deliver.”

Does digital transformation really mean bunching exponential technologies together? I believe that digital transformation is first and foremost a business transformation. A digital mindset is not only about new-age technology, but about curiosity, creativity, problem-solving, empathy, flexibility, decision-making and judgment, among others. Enterprises need to foster this digital mindset, both within their own boundaries and across company units. The World Economic Forum lists the top 10 skills needed for the fourth industrial revolution. None of them is totally technical. They are, rather, a combination of important soft skills relevant for the digital revolution. You don’t need to be a technical expert to understand how technology will impact your work. You need to know the foundational aspects, remain open-minded and work with technology mavens. Digital transformation is more about cultural change that requires enterprises to continually challenge the status quo, experiment often, and get comfortable with failure. The most likely reason for a business to undergo digital transformation is survival and relevance. Businesses mostly don’t transform by choice, because it is expensive and risky. Businesses go through transformation when they have failed to evolve. Hence its implementation calls for tough decisions, like walking away from long-standing business processes that companies were built upon in favor of relatively new practices that are still being defined.

Business Implementation aspects of Digital Transformation

Disruption in digital business implies a more positive and evolving atmosphere, instead of the usual negative undertones that are attached to the word. According to the MIT Center for Digital Business, “Companies that have embraced digital transformation are 26 percent more profitable than their average industry competitors and enjoy a 12 percent higher market valuation.” Many startups and enterprises are adopting an evolutionary approach to transforming their business models themselves as part of digital transformation. According to McKinsey, one-third of the top 20 firms in industry segments will be disrupted by new competitors within five years.

The various business models being adopted in the digital transformation era are:

- The Subscription Model (Netflix, Dollar Shave Club, Apple Music): Disrupts through “lock-in” by taking a product or service that is traditionally purchased on an ad hoc basis and locking in repeat custom by charging a subscription fee for continued access to the product or service.

- The Freemium Model (Spotify, LinkedIn, Dropbox): Disrupts through digital sampling, where users pay for a basic service or product with their data or ‘eyeballs’ rather than money, and are then charged to upgrade to the full offer. Works where the marginal cost of extra units and distribution is lower than advertising revenue or the sale of personal data.

- The Free Model (Google, Facebook): Disrupts with an ‘if-you’re-not-paying-for-the-product-you-are-the-product’ model that involves selling personal data or ‘advertising eyeballs’ harvested by offering consumers a ‘free’ product or service that captures their data or attention.

- The Marketplace Model (eBay, iTunes, App Store, Uber, Airbnb): Disrupts with the provision of a digital marketplace that brings together buyers and sellers directly, in return for a transaction or placement fee or commission.

- The Access-over-Ownership Model (Zipcar, Peer buy): Disrupts by providing temporary access to goods and services traditionally only available through purchase. Includes ‘Sharing Economy’ disruptors, which take a commission from people monetizing their assets (home, car, capital) by lending them to ‘borrowers’.

- The Hypermarket Model (Amazon, Apple): Disrupts by ‘brand bombing’, using sheer market power and scale to crush competition, often by selling below cost price.

- The Experience Model (Tesla, Apple): Disrupts by providing a superior experience, for which people are prepared to pay.

- The Pyramid Model (Amazon, Microsoft, Dropbox): Disrupts by recruiting an army of resellers and affiliates who are often paid on a commission-only basis.

- The On-Demand Model (Uber, Operator, TaskRabbit): Disrupts by monetizing time and selling instant access at a premium. Includes taking a commission from people with money but no time who pay for goods and services delivered or fulfilled by people with time but no money.

- The Ecosystem Model (Apple, Google): Disrupts by selling an interlocking and interdependent suite of products and services that increase in value as more are purchased. Creates consumer dependency.

Since digital transformation and its manifestation in various business models are being rapidly adopted by startups, they are providing tough competition to incumbent corporate houses and large enterprises. Although enterprises are also looking to digitally transform their businesses, their scale and complexity make it a difficult and resource-consuming activity. This has compelled enterprises to adopt certain strategies to counter the cannibalizing effect, in the following ways:

- The Block Strategy. Using all means available to inhibit the disruptor. These means can include claiming patent or copyright infringement, erecting regulatory hurdles, and using other legal barriers.

- The Milk Strategy. Extracting the most value possible from vulnerable businesses while preparing for the inevitable disruption.

- The Invest in Disruption Strategy. Actively investing in the disruptive threat, including disruptive technologies, human capabilities, digitized processes, or perhaps acquiring companies with these attributes.

- The Disrupt the Current Business Strategy. Launching a new product or service that competes directly with the disruptor, and leveraging inherent strengths such as size, market knowledge, brand, access to capital, and relationships to build the new business.

- The Retreat into a Strategic Niche Strategy. Focusing on a profitable niche segment of the core market where disruption is less likely to occur (e.g. travel agents focusing on corporate travel and complex itineraries, booksellers and publishers focusing on the academic niche).

- The Redefine the Core Strategy. Building an entirely new business model, often in an adjacent industry where it is possible to leverage existing knowledge and capabilities (e.g. IBM to consulting, Fujifilm to cosmetics).

- The Exit Strategy. Exiting the business entirely and returning capital to investors, ideally through a sale of the business while value still exists (e.g. MySpace selling itself to News Corp).

The curious evolution of AI and its relevance in digital transformation

So here’s an interesting question: AI has been around for more than 60 years, so why is it only gaining traction with the advent of digital? The first practical application of such “machine intelligence” was introduced by Alan Turing, the British mathematician and WWII code-breaker, in 1950. He even created the Turing test, which is still used today as a benchmark to determine a machine’s ability to “think” like a human. The biggest differences between AI then and now are hardware limitations, access to data, and the rise of machine learning.

Hardware limitations held back sustained AI adoption until the late 1990s. There were many instances where the scope and opportunity of AI-led transformation were identified and appreciated, but implementation ran into difficult circumstances. The field of AI research was founded at a workshop held on the campus of Dartmouth College during the summer of 1956. But eventually it became obvious that researchers had grossly underestimated the difficulty of the project, given computer hardware limitations. The U.S. and British governments stopped funding undirected research into artificial intelligence, leading to years known as an “AI winter”.

In another example, in the early 1980s, a visionary initiative by the Japanese government inspired governments and industry to provide AI with billions of dollars, but by the late 1980s investors had become disillusioned by the absence of the needed computing power (hardware) and withdrew funding again. Investment and interest in AI boomed in the first decades of the 21st century, when machine learning was successfully applied to many problems in academia and industry thanks to powerful computer hardware. Combined with the rise of digital, which led to an explosion of data and to data generation in every aspect of business, this made it far easier for AI not only to be adopted but also to evolve toward more accurate execution.

The Core of Digital Transformation: AI Strategy

According to McKinsey, by 2023, 85 percent of all digital transformation initiatives will have AI strategy embedded at their core. Due to radical computational power, near-endless amounts of data, and unprecedented advances in ML algorithms, AI strategy will emerge as the most disruptive business scenario, and its manifestation in the various trends that we see and will continue to see will drive digital transformation as we understand it. The following are the forward-looking scenarios in which AI strategy becomes core to digital transformation:

AI’s growing entrenchment: This time, the scale and scope of the surge in attention to AI are much larger than before. For starters, the speed, availability, and sheer scale of infrastructure have enabled bolder algorithms to tackle more ambitious problems. Not only is the hardware faster, sometimes augmented by specialized arrays of processors (e.g., GPUs), it is also available in the shape of cloud services, data farms, and data centers.

Geography, societal Impact: AI adoption is reaching institutions outside of the industry. Lawyers will start to grapple with how laws should deal with autonomous vehicles; economists will study AI-driven technological unemployment; sociologists will study the impact of AI-human relationships. This is the world of the future and the new next.

Artificial intelligence will be democratized: A recent Forrester study found that while 58 percent of professionals are researching artificial intelligence, only 12 percent are actually using an AI system. AI requires specialized skills and infrastructure to implement. Companies like Facebook have realized this and are already doing all they can to simplify the implementation of AI and make it more accessible. Cloud platforms like Google APIs, Microsoft Azure, and AWS are allowing developers to create intelligent apps without having to set up or maintain any other infrastructure.

Niche AI will grow: By all accounts, 2020 and beyond won’t be for large, general-purpose AI systems. Instead, there will be an explosion of specific, highly niche artificial intelligence adoption cases. These include autonomous vehicles (cars and drones), robotics, bots (consumer-oriented, such as Amazon Echo), and industry-specific AI (think finance, health, security, etc.).

Continued Discourse on AI ethics, security & privacy: Most AI systems are immensely complex sponges that absorb data and process it at tremendous rates. The risks related to AI ethics, security and privacy are real and need to be addressed through consideration and consensus. Sure, it’s unlikely that these problems will be solved in 2020, but as long as the conversation around these topics continues, we can expect at least some headway.

Algorithm economy: With massive data generation using flywheels, an economy will be created for algorithms, much like a marketplace for algorithms. Engineers, data scientists, organizations, and others will share algorithms for using the data to extract the required information.

Where is AI Heading in the Digital Road?

While much of this is still rudimentary at the moment, we can expect sophisticated AI to significantly impact our everyday lives. Here are four ways AI might affect us in the future:

Humanizing AI: AI will grow beyond a “tool” to fill the role of “co-worker.” Most AI software is too hidden technologically to significantly change the daily experience of the average worker. It exists only in a back end, with little interface with humans. But several AI companies combine advanced AI with automation and intelligent interfaces that drastically alter the day-to-day workflow of workers.

Design Thinking & behavioral science in AI: We will witness a divergence from more powerful intelligence to more creative intelligence. There have already been attempts to make AI engage in creative efforts, such as artwork and music composition. We’ll see more and more artificial intelligence designing artificial intelligence, resulting in many mistakes, plenty of dead ends, and some astonishing successes.

Rise of Cyborgs: As augmented AI is already mainstream thinking, the future might witness the perfect culmination of man-machine augmentation: AI augmenting humans, intelligently handling operations that humans cannot perform, using neural commands.

AI Oracle: AI might become so connected with every aspect of our lives, processing every quantum of data from every perspective, that it would know perfectly how to raise the overall standard of living for the human race. People would religiously follow its instructions (as we already follow GPS navigation), leading to an equation of dependence closer to devotion.

The Final Word

Digital business transformation is the ultimate challenge in change management. It impacts not only industry structures and strategic positioning but also all levels of an organization (every task, activity, process) and even its extended supply chain. Hence, to brace for digital-led disruption, one has to embrace an AI-led strategy. Organizations that deploy AI strategically will ultimately enjoy advantages ranging from cost reductions and higher productivity to top-line benefits such as increasing revenue and profits, richer customer experiences, and working-capital optimization.

( AIQRATE, A bespoke global AI advisory and consulting firm. A first in its genre, AIQRATE provides strategic AI advisory services and consulting offerings across multiple business segments to enable clients navigate their AI powered transformation, innovation & revival journey and accentuate their decision making and business performance.

AIQRATE works closely with Boards, CXOs and Senior leaders advising them on their Analytics to AI journey construct with the art of possible AI roadmap blended with a jump start approach to AI driven transformation with AI@scale centric strategy; AIQRATE also consults on embedding AI as core to business strategy within business processes & functions and augmenting the overall decision-making capabilities. Our bespoke AI advisory services focus on curating & designing building blocks of AI strategy, embed AI@scale interventions and create AI powered organizations.

AIQRATE’s path breaking 50+ AI consulting frameworks, methodologies, primers, toolkits and playbooks crafted by seasoned and proven AI strategy advisors enable Indian & global enterprises, GCCs, Startups, SMBs, VC/PE firms, and Academic Institutions enhance business performance & ROI and accelerate decision making capability. AIQRATE also provide advisory support to Technology companies, business consulting firms, GCCs, AI pure play outfits on curating discerning AI capabilities, solutions along with differentiated GTM and market development strategies.

Visit www.aiqrate.ai to experience our AI advisory services & consulting offerings. Follow us on Linkedin | Facebook | YouTube | Twitter | Instagram )

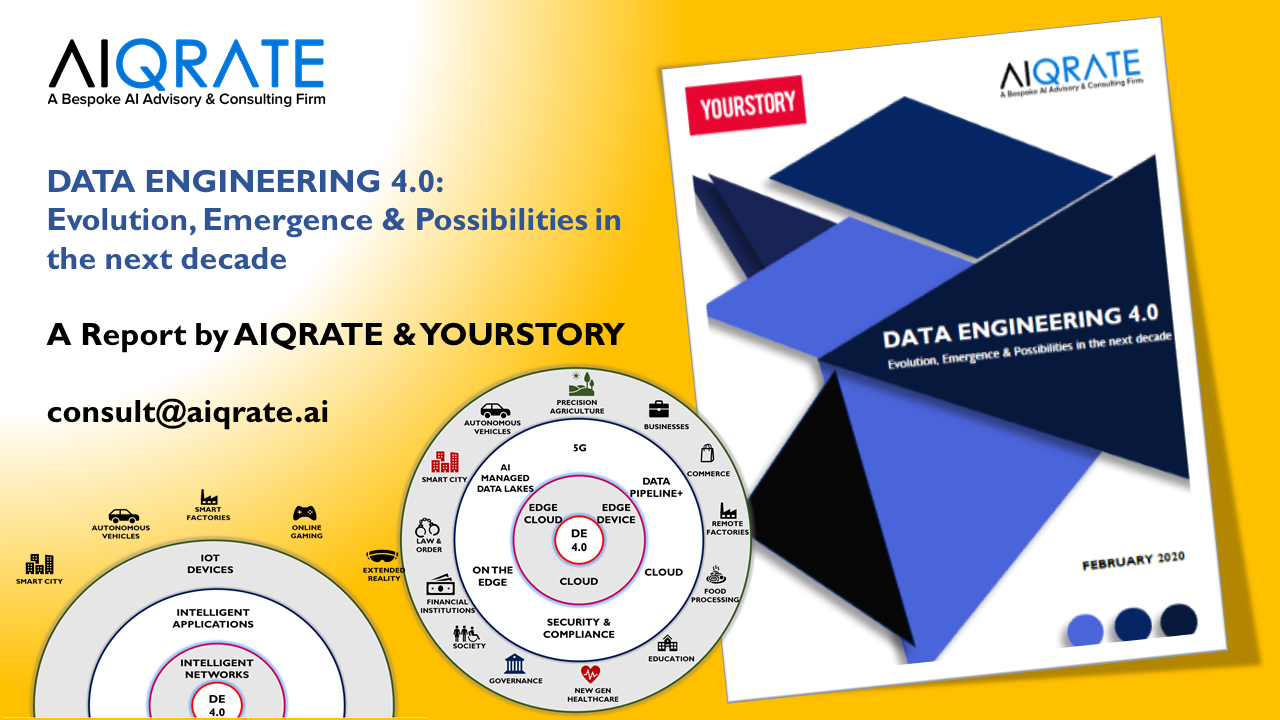

REPORT: Data Engineering 4.0: Evolution, Emergence and Possibilities in the next decade

Today, most technology aficionados think of data engineering as the capabilities associated with traditional data preparation and data integration, including data cleansing, data normalization and standardization, data quality, data enrichment, metadata management and data governance. But that definition of data engineering is insufficient to derive and drive new sources of societal, business and operational value. The field of data engineering brings together data management (data cleansing, quality, integration, enrichment, governance) and data science (machine learning, deep learning, data lakes, cloud) functions and includes standards, systems design and architectures.

There are two critical economic-based principles that will underpin the field of Data Engineering:

Principle #1: Curated data never depletes, never wears out and can be used across an unlimited number of use cases at a near-zero marginal cost.

Principle #2: Data assets appreciate, not depreciate, in value the more that they are used; that is, the more these data assets are used, the more accurate, more reliable, more efficient and safer they become.

There have been significant exponential technology advancements in the past few years; data engineering is the most topical of them. Burgeoning data velocity, data trajectory, data insertion, data mediation and wrangling, data lakes, and cloud security and infrastructure have revolutionized the data engineering stream. Data engineering has reinvented itself from a set of passive data aggregation tools in the BI/DW arena into a business-critical function. As unprecedented advancements are slated to occur in the next few years, there is a need for additional focus on data engineering. AI acceleration is underpinned by robust data engineering capabilities.

YourStory & AIQRATE curated and unveiled a seminal report on “Data Engineering 4.0: Evolution, Emergence & Possibilities in the next decade.” A first in the area, the report covers a broad spectrum of key growth drivers for Data Engineering 4.0 and highlights the incremental impact of data engineering in the time to come, due to the emergence of 5G, quantum computing and cloud infrastructure. The report also includes a comprehensive section on applications across the industry segments of smart cities, autonomous vehicles and smart factories, and the ensuing adoption of data engineering capabilities in these segments. Further, it dwells on the significance of incubating data engineering capabilities in deep tech startups to gain a competitive edge, and enumerates salient examples of data-driven companies in India that are leveraging data engineering prowess. The report also touches upon data legislation and privacy by proposing certain regulations and suggesting revised ones to ensure end-to-end protection of individual rights and the security and safety of the ecosystem. Data Engineering 4.0 will be an overall Trojan horse in the exponential technology landscape, and much of the adoption acceleration that AI needs to drive will depend on advancements in data engineering.

Lock in winning AI deals: Strategic recommendations for enterprises & GCCs

Artificial Intelligence is unleashing exciting growth opportunities for enterprises and GCCs; at the same time, it presents challenges and complexities when sourcing, negotiating and enabling AI deals. The hype surrounding this rapidly evolving space can make it seem as if AI providers hold the most power at the negotiation table. After all, the market is rife with narratives from analysts stating that enterprises and GCCs that fail to embrace and implement AI swiftly run the risk of losing their competitiveness. With a pragmatic approach and an acknowledgement of concerns and potential risks, it is possible to negotiate mutually beneficial contracts that are flexible, agile and, most importantly, scalable. The following strategic choices will help you lock in winning AI deals:

Understand AI readiness & roadmap and use cases

It can be difficult to predict exactly where and how AI can be used in the future, as it is constantly being developed, but creating a readiness roadmap and identifying a ready reckoner of potential use cases is a must. Your enterprise or GCC readiness roadmap will help guide your sourcing efforts, so you can find the provider best suited to your needs and able to scale with your business use cases. You must also clearly frame your targeted objectives, both in your discussions with vendors and in the contract. This includes not only a stated performance objective for the AI, but also a definition of what would constitute failure and the legal consequences thereof.

Understand your service provider’s roadmap and ability to provide AI evolution to steady state

Once you begin discussions with AI vendors and providers, be sure to ask how evolved their capabilities and offerings are, how complex the data sets used to train their system were, and which use cases they have implemented. These discussions can uncover potential business and security risks and help shape the questions the procurement and legal teams should address in the sourcing process. Understanding the service provider’s roadmap will also help you decide whether they will be able to grow and scale with you. Gaining insight into the service provider’s growth plans can uncover how they will benefit from your business and where they stand against their competitors. The cutthroat competition among AI rivals means that early adopter enterprises and GCCs that want to pilot or deploy AI@scale will see more capabilities available at ever-lower prices over time. Always note that AI service providers benefit significantly from the use cases you bring forward for trial as well as the vast amounts of data being processed on their platforms. These points should be leveraged to negotiate a better deal.

Identify business risk cycles & inherent bias

As with any implementation, it is important to assess the various risks involved. As technologies become increasingly interconnected, entry points for potential data breaches and risk of potential compliance claims from indirect use also increase. What security measures are in place to protect your data and prevent breaches? How will indirect use be measured and enforced from a compliance standpoint? Another risk AI is subject to is unintentional bias from developers and the data being used to train the technology. Unlike traditional systems built on specific logic rules, AI systems deal with statistical truths rather than literal truths. This can make it extremely difficult to prove with complete certainty that the system will work in all cases as expected.

Develop a sourcing and negotiation plan

Using what you gained in the first three steps, develop a sourcing and negotiation plan that focuses on transparency and clearly defined accountability. You should seek to build an agreement that aligns both your enterprise’s and the service provider’s roadmaps and addresses data ownership and overall business and security-related risks. In developing AI, transparency of the algorithm used is essential so that unintended bias can be addressed. Moreover, these systems should be subjected to extensive testing based on appropriate data sets, as such systems need to be “trained” to gain equivalence to human decision making. Gaining upfront and ongoing visibility into how the systems will be trained and tested will help you hold the AI provider accountable for potential mishaps resulting from their own erroneous data and help ensure the technology is working as planned.

Develop a deep understanding of your data, IP, commercial aspects

Another major issue with AI is the intellectual property of the data integrated and generated by an AI product. For an artificial intelligence system to become effective, enterprises would likely have to supply an enormous quantity of data and invest considerable human and financial resources to guide its learning. Does the service provider of the artificial intelligence system acquire any rights to such data? Can it use what its artificial intelligence system learned in one company’s use case to benefit its other customers? In extreme cases, this could mean that the experience acquired by a system in one company could benefit its competitors. If AI is powering your business and product, or if you start to sell a product using AI insights, what commercial protections should you have in place?

In the end, do realize the enormous value of your data and of your participation in AI readiness and maturity workshops, immersion sessions, and the identification of new and practical AI use cases. All of this is hugely beneficial to the service provider’s success as well and will enable you to strategically source and win the right AI deal.

(AIQRATE advisory & consulting is a bespoke global AI advisory & consulting firm and provides strategic advisory services to boards, CXOs, and senior leaders to curate and design building blocks of AI strategy, embed AI@scale interventions & create AI powered enterprises. Visit www.aiqrate.ai, reach out to us at consult@aiqrate.ai)

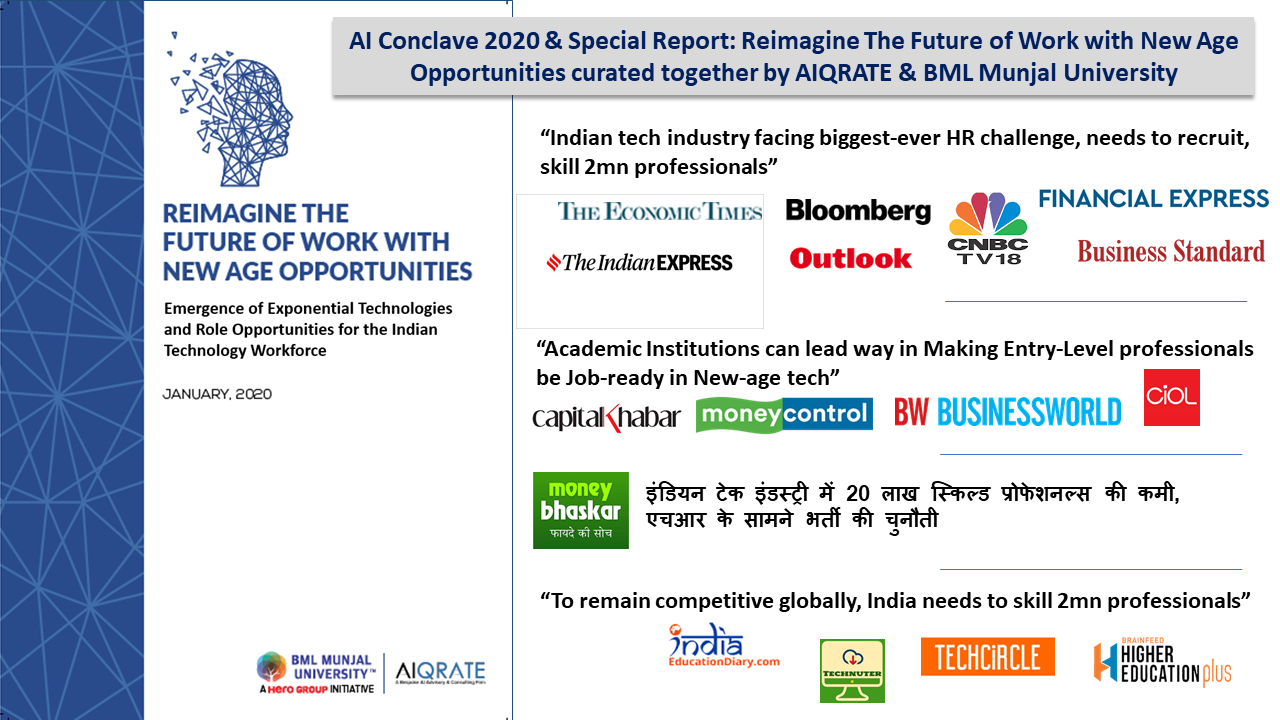

REPORT: Reimagine The Future of Work with New Age Opportunities

The management of talent has always been and continues to be a major challenge for most industries. This is particularly true for knowledge-based industries like information technology. The dramatically changing dynamics of the Indian technology industry compound the challenges and opportunities it faces.

Never since the advent of mass production has an industry seen such dramatic volatility in such a short period of time. The earlier revolution primarily added to the productivity of labor and moved across the globe. The current revolution is not merely transcending national borders; it is redefining jobs, eliminating others and creating new opportunities.

AI-Driven Disruption And Transformation: New Business Segments To Novel Market Opportunities

There’s little doubt that Artificial Intelligence (AI) is driving the decisive strategic elements in multiple industries, and algorithms are sitting at the core of every business model and in the enterprise DNA. Conventional wisdom, based on no small amount of research, holds that the rise of AI will usher in radical, disruptive changes in incumbent industries and sectors in the next five to 10 years.

Additionally, there has never been a better time to launch an AI venture. Investments in AI-focused ventures have grown 1800% in just six years. The rationale behind these numbers comes, in part, from the fact that enterprises expect AI to enable them to move into new business segments or to maintain a competitive edge in their industry.

Strategists believe this won’t come as a surprise to CXOs and decision-makers, as accelerating AI adoption and the proliferation of smart, intuitive ML algorithms spawn new industries and business segments and, overall, trigger new opportunities for business monetization. However, a few questions loom large for CXOs: How will these new industries and business segments be created with AI? And what strategic shifts can leadership make to monetize these new business opportunities?

The creation of new industries and business segments depends on dramatic advances in AI that can move swiftly from discovery to commercial application to a new industry. New industry segments around AI are in the making and are far from tapped. A cursory look at new-age businesses shows the range: micro-segmented, hyper-personalized online shopping platforms, GPS-driven ride-sharing companies, recommendation-driven streaming channels, adaptive-learning-based EdTech companies, and conversational AI-driven new and work scheduling are just a few of the imminent and visible examples. Yet a lot more can be done in this space.

AI adoption calls for intentional efforts to adapt to this onslaught of algorithms and to how they are affecting customer and employee behavior. As algorithms become a permanent fixture in everyday life, organizations are forced to update legacy technology strategies and supporting methodologies to better reflect how the real world is evolving. And the need to do so is becoming increasingly obligatory.

On the other side, traditional and incumbent enterprises are reverse engineering investments, processes, and systems to better align with how markets are changing. Because it focuses on customer behavior, AI is, in its own way, making businesses more human. As such, Artificial Intelligence is not specifically about technology; it is empowered by it. Without a fixed end in mind, self-learning algorithms continually seek out ways to use technology to improve customer experiences and relationships. AI also represents an effort that introduces new models for business and, equally, creates a way of staying in business as customers become increasingly aware and selective.

Today, AI expertise is focused more on developing commercial applications that optimize efficiencies in existing industries and is focused less on developing patented algorithms that could lead to new industries. These efficiencies are accelerating the sectoral consolidation and convergence, and are less about new industry creation.

However, AI’s most potent long-term economic use may just be to augment the discovery and pursuit of solutions to large, complex, and unresolved problems that could become the foundations of new industry segments. Enterprises have started realizing the significance of long-term strategic interest and investment in using AI in this way. A few of the above-mentioned examples already testify to AI triggering new industry segments and business opportunities. The real winners in the algorithm-driven economy will be business leaders who align their strategies to build AI expertise from ground zero, keep a continuous tab on blockbuster algorithms, and redefine business segments in ways that enable monetization of new opportunities.

AI has immense potential to jumpstart the creation of new industries and the disruption of existing ones. The curation of this as a strategic roadmap for business leaders is far from easy, but it carries great rewards for businesses. It takes a village to bring about change, and it also takes the spark and perseverance of an AI strategist to spot important trends and create a sense of urgency around new possibilities.


Reimagining the future of travel and hospitality with artificial intelligence


Over the years, the influence of artificial intelligence (AI) has spread to almost every aspect of the travel and the hospitality industry. Thirty percent of hospitality businesses use AI to augment at least one of their primary sales processes, and most customer personalisation is done using AI. The proliferation of AI in the travel and hospitality industry can be credited to the humongous amount of data being generated today. AI helps analyse data from obvious sources, brings value in assimilating patterns in image, voice, video, and text, and turns it into meaningful and actionable insights for decision making. Trends, outliers, and patterns are figured out using machine learning-based algorithms that help in guiding a travel or hospitality company to make informed decisions.

“Discounts, schemes, tour packages, and the seasons and travellers to target are formulated using this intelligent data, combined with behavioural science and social media attribution, to understand customer behaviour and insights.”

Let’s take a close look at the AI-driven application areas in the travel and hospitality industry and the impact on the ensuing business value chain:

Bespoke and curated experiences

There are always a few trailblazers who are up for a new challenge and adopt new-age exponential technologies. Many hotel chains have started using an AI concierge. One great example is Hilton Worldwide’s Connie, the first true AI-powered concierge bot. Connie stands two feet high, and guests can interact with it during check-in. Connie is powered by IBM’s Watson AI and uses the WayBlazer travel database. It can provide succinct information to guests on local attractions, places to visit, and so on. Being AI-driven with self-learning ability, it can learn, adapt, and respond to each guest on a personalised basis.

In the travel business, Mezi uses AI with natural language processing (NLP) to provide a personalised experience to business travellers, who are usually strapped for time. It promotes the concept of bleisure (business+leisure) to address the needs of the workforce. The company’s research shows that 84 percent of business travellers return feeling frustrated, burnt out, and unmotivated. The tedious and monotonous planning that goes into travel booking could be the reason. With AI and NLP, Mezi collects individual preferences and generates personalised suggestions, so that travellers get a bespoke, streamlined experience and the issues they face are addressed properly.

Intelligent travel search

Increased productivity now begins with the search for the hotel, and sophisticated AI usage has paved the way for the customer to access more data than ever before. Booking sites like Lola.com provide on-demand travel services and have developed algorithms that not only instantly connect people to their team of travel agents, who find and book flights, hotels, and cars, but also equip those agents with technology that makes research and decisions easier.

Intelligent travel assistants

Chatbot technology is another big strand of AI, and not surprisingly, many travel brands have launched their own versions in the past year or so. Skyscanner is just one example, creating an intelligent bot to help consumers find flights in Facebook Messenger. Users can also use it to request travel recommendations and random suggestions. Unlike the chatbots of ecommerce or retail brands, which can appear gimmicky, there is an argument that examples like Skyscanner’s are much more relevant and useful for everyday consumers. After all, with the arrival of many more travel search websites, consumers are being overwhelmed by choice, not necessarily helped by it. Consequently, a chatbot like Skyscanner’s is able to cut through the noise, connecting with consumers in their own time and in the social media spaces they most frequently visit.

Recently, Aeroméxico started using a Facebook Messenger chatbot to answer very generic customer questions. The main idea was to cater to the 80 percent of questions that are usually repeated and concern common topics. AI is thus well suited to taking over a repetitive process, and airlines benefit hugely from this. KLM Royal Dutch Airlines uses AI to respond to customer queries on Twitter and Facebook. It uses an algorithm from a company called DigitalGenius, which is trained on 60,000 questions and answers. In addition, Deutsche Lufthansa’s bot Mildred can help in searching for the cheapest fares.
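To make the idea of answering repeat questions concrete, here is a minimal sketch in Python. It is not how any of the airlines above built their bots; it simply matches an incoming question against a tiny, hypothetical FAQ by word overlap (Jaccard similarity) and hands the query to a human when nothing matches well. The `FAQ` entries, the `answer` function, and the `threshold` value are all assumptions made for illustration.

```python
import re

# Hypothetical FAQ with placeholder replies; a production bot would be
# trained on a far larger set of real questions and answers.
FAQ = {
    "What is the baggage allowance?": "REPLY: baggage policy",
    "How do I check in online?": "REPLY: online check-in steps",
    "Can I change my flight date?": "REPLY: date change rules",
}


def tokens(text):
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a, b):
    """Share of words two sets have in common (0.0 when either is empty)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def answer(question, threshold=0.3):
    """Return the reply for the closest FAQ entry, or None to escalate."""
    asked = tokens(question)
    best_score, best_reply = 0.0, None
    for known_question, reply in FAQ.items():
        score = jaccard(asked, tokens(known_question))
        if score > best_score:
            best_score, best_reply = score, reply
    # Below the threshold the bot is not confident enough to answer,
    # so the question should go to a human agent instead.
    return best_reply if best_score >= threshold else None
```

Calling `answer("what is my baggage allowance")` returns the baggage reply, while an unrelated question returns `None` and would be routed to an agent. Real systems typically replace the word-overlap score with a trained language model, but the division of labour is the same: the bot takes the common questions, people take the rest.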

Intelligent recommendations

International hotel search engine Trivago acquired Tripl, a machine learning startup based in Hamburg, Germany, as it ramps up its product with recommendation and personalisation technology, giving it a customer-centric approach. The AI algorithm gives tailored travel recommendations by identifying trends in users’ social media activities and comparing them with in-app data of like-minded users. At launch, users could sign up only through Facebook, potentially sharing oodles of profile information such as friends, relationship status, hometown, and birthdays.

Persona-based travel recommendations and the use of customised pictures and text to entice travel are now gaining ground. KePSLA’s travel recommendation platform is one of the first in the world to do this, using deep learning and NLP solutions. With 81 percent of people believing that intelligent machines would be better at handling data than humans, there is also a certain level of consumer confidence in this area.

Knowing your traveller

Dorchester Collection is another hotel chain to make use of AI. However, instead of using it to provide a front-of-house service, it has adopted it to interpret and analyse customer behaviour in depth from raw data. Partnering with technology company RicheyTX, Dorchester Collection has helped to develop an AI platform called Metis.

Delving into swathes of customer feedback such as surveys and reviews (which would take an inordinate amount of time to find and analyse manually), it is able to measure performance and instantly discover what really matters to guests. Metis helped Dorchester to discover that breakfast is not merely an expectation but something guests place huge importance on. As a result, the hotels began to think about how they could enhance and personalise the breakfast experience.

Intelligent forecasting: flight fares and hotel tariffs

Flight fares and hotel tariffs are dynamic and vary on a real-time basis, depending on the provider. No one has time to track all those changes manually. Thus, intelligent algorithms that monitor prices and send out timely alerts with hot deals are currently in high demand in the travel industry.

Trivago and MakeMyTrip are sifting through swathes of data points, variables, and demand and supply patterns to recommend optimised travel and hotel prices. The AltexSoft data science team has built such a fare predictor tool for one of their clients, the global online travel agency Fareboom.com. Working on its core product, a digital travel booking website, they could access and collect historical data about millions of fare searches going back several years. Armed with such information, they created a self-learning algorithm capable of predicting future price movements based on a number of factors, such as seasonal trends, demand growth, airlines’ special offers, and deals.
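As a rough illustration of how historical fare data can inform a buy-or-wait alert, the Python sketch below compares a quoted fare with the historical average for the same travel month. This is a deliberately simple seasonal baseline, not the self-learning algorithm described above; the function names, the `(month, fare)` record format, and the 5 percent `margin` are assumptions made for the example.

```python
from collections import defaultdict
from statistics import mean


def monthly_average(history):
    """Average historical fare per travel month.

    `history` is an iterable of (month, fare) pairs, an assumed format.
    """
    fares_by_month = defaultdict(list)
    for month, fare in history:
        fares_by_month[month].append(fare)
    return {month: mean(fares) for month, fares in fares_by_month.items()}


def advise(history, month, quoted_fare, margin=0.05):
    """Suggest 'buy', 'wait', or 'neutral' against the seasonal baseline."""
    averages = monthly_average(history)
    if month not in averages:
        return "unknown"  # no past data for this month
    baseline = averages[month]
    if quoted_fare <= baseline * (1 - margin):
        return "buy"  # clearly cheaper than usual for this season
    if quoted_fare >= baseline * (1 + margin):
        return "wait"  # clearly pricier than usual for this season
    return "neutral"
```

With past July fares of 400 and 440, a quote of 380 for July comes back as `"buy"`. A production predictor would add the other factors mentioned above, such as demand growth and airline offers, and would learn its thresholds from data instead of using a fixed margin.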

Optimised disruption management: delays and cancellations

While the previous case is focused mostly on planning trips and helping users navigate the most common issues while travelling, automated disruption management is somewhat different. It aims at resolving actual problems a traveller might face on the way to a destination. Mostly applied to business and corporate travel, disruption management is always a time-sensitive task, requiring an instant response.

While the chances of being affected by a storm or a volcanic eruption are very small, the risk of a travel disruption is still quite high: there are thousands of delays and several hundred cancelled flights every day. With the recent advances in AI, it has become possible to predict such disruptions and efficiently mitigate the loss for both the traveller and the carrier. The 4site tool, built by Cornerstone Information Systems, aims to enhance the efficiency of enterprise travel.

The product caters to travellers, travel management companies, and enterprise clients, providing a unique set of features for real-time travel disruption management. For instance, if there is heavy snowfall at your destination and all flights are redirected to another airport, a smart assistant can check for available hotels there or book a transfer from your actual place of arrival to your initial destination.

Not only are passengers affected by travel disruptions; airlines bear significant losses every time a flight is cancelled or delayed. Thus, Amadeus, one of the leading global distribution systems (GDS), has introduced a Schedule Recovery system, aiming to help airlines mitigate the risks of travel disruption. The tool helps airlines instantly address and efficiently handle any threats and disruptions in their operations.

Future potential: Reflecting on the use cases above, in which the travel and hospitality industry already leverages AI to a large extent, a few latent areas of the industry are likely to embrace AI in the future:

“Undoubtedly, we will witness many travel and hospitality organisations using AI for intelligent recommendations as well as launching their own chatbots. There’s already been a suggestion that Expedia is next in line, but it is reportedly set to focus on business travel rather than holidaymakers.”