Data Sciences and analytics technology can deliver huge benefits to both individuals and organizations: personalized service, detection of fraud and abuse, efficient use of resources, and prevention of failure or accident. So why are questions being raised about the ethics of analytics and its superset, Data Sciences?

Ethical Business Processes in the Analytics Industry

At its core, an organization is “just people” and so are its customers and stakeholders. It will be individuals who choose what the organization does or does not do, and individuals who will judge its appropriateness. As individuals, our perspective is formed from our experience and the opinions of those we respect. Not surprisingly, different people will have different opinions on what is an appropriate use of Data Sciences, and of analytics technology in particular, so who decides which is “right”? Customers and stakeholders may hold different opinions from the organization about what is ethical.

This suggests that organizations should be thoughtful in their use of analytics, consulting widely and forming policies that record the decisions and conclusions they have come to. They should consider the wider implications of their activities, including:

Context – For what purpose was the data originally surrendered? For what purpose is the data now being used? How far removed from the original context is its new use? Is this appropriate?

Consent & Choice – What are the choices given to an affected party? Do they know they are making a choice? Do they really understand what they are agreeing to? Do they really have an opportunity to decline? What alternatives are offered?

Reasonable – Is the depth and breadth of the data used and the relationships derived reasonable for the application it is used for?

Substantiated – Are the sources of data used appropriate, authoritative, complete and timely for the application?

Owned – Who owns the resulting insight? What are their responsibilities towards it in terms of its protection and the obligation to act?

Fair – How equitable are the results of the application to all parties? Is everyone properly compensated?

Considered – What are the consequences of the data collection and analysis?

Access – What access to data is given to the data subject?

Accountable – How are mistakes and unintended consequences detected and repaired? Can the interested parties check the results that affect them?

Together these facets are called the ethical awareness framework. This framework was developed by the UK and Ireland Technical Consultancy Group (TCG) to help people develop ethical policies for their use of analytics and Data Sciences. Examples of good and bad practice are emerging in the industry, and in time they will guide regulation and legislation. The choices we make as practitioners will ultimately determine the level of legislation imposed around the technology and our subsequent freedom to pioneer in this exciting emerging technical area.

Designing Digital Business for Transparency and Trust

With the explosion of digital technologies, companies are sweeping up vast quantities of data about consumers’ activities, both online and off. Feeding this trend are new smart, connected products—from fitness trackers to home systems—that gather and transmit detailed information.

Though some companies are open about their data practices, most prefer to keep consumers in the dark, choose control over sharing, and ask for forgiveness rather than permission. It’s also not unusual for companies to quietly collect personal data they have no immediate use for, reasoning that it might be valuable someday.

In a future in which customer data will be a growing source of competitive advantage, gaining consumers’ confidence will be key. Companies that are transparent about the information they gather, give customers control of their personal data, and offer fair value in return for it will be trusted and will earn ongoing and even expanded access. Those that conceal how they use personal data and fail to provide value for it stand to lose customers’ goodwill—and their business.

At the same time, consumers appreciate that data sharing can lead to products and services that make their lives easier and more entertaining, educate them, and save them money. Neither companies nor their customers want to turn back the clock on these technologies—and indeed the development and adoption of products that leverage personal data continue to soar. The consultancy Gartner estimates that nearly 5 billion connected “things” will be in use in 2015—up 30% from 2014—and that the number will quintuple by 2020.

Resolving this tension will require companies and policy makers to move the data privacy discussion beyond advertising use and the simplistic notion that aggressive data collection is bad. We believe the answer is more nuanced guidance: specifically, guidelines that align the interests of companies and their customers and ensure that both parties benefit from personal data collection.

Understanding the “Privacy Sensitiveness” of Customer Data

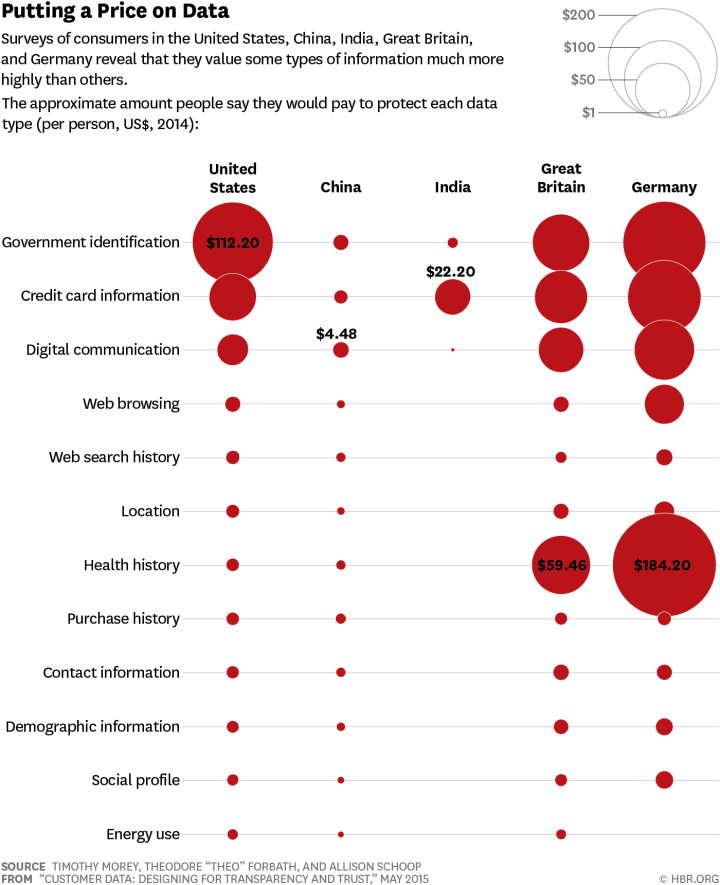

Keeping track of the “privacy sensitiveness” of customer data is also crucial, as data privacy is not perfectly black and white. Some forms of data are more important for customers to protect and keep private. To see how much consumers valued their data, a conjoint analysis was performed to determine what amount survey participants would be willing to pay to protect different types of information. Though the value assigned varied widely among individuals, we were able to determine, in effect, a median, by country, for each data type.

The responses revealed significant differences from country to country and from one type of data to another. Germans, for instance, place the most value on their personal data, and Chinese and Indians the least, with British and American respondents falling in the middle. Government identification, health, and credit card information tended to be the most highly valued across countries, and location and demographic information among the least.

This spectrum doesn’t represent a “maturity model,” in which attitudes in a country predictably shift in a given direction over time (say, from less privacy conscious to more). Rather, our findings reflect fundamental dissimilarities among cultures. The cultures of India and China, for example, are considered more hierarchical and collectivist, while Germany, the United States, and the United Kingdom are more individualistic, which may account for their citizens’ stronger feelings about personal information.

Adopting Data Aggregation Paradigm for Protecting Privacy

If companies want to protect their users and data, they need to be sure to collect only what’s truly necessary. An abundance of data doesn’t necessarily mean an abundance of usable data. Keeping data collection concise and deliberate is key: prioritizing relevant data over sheer volume helps protect privacy.

It’s also important to keep data aggregated in order to protect privacy and instill transparency. Algorithms are currently being used for everything from machine thinking and autonomous cars, to data science and predictive analytics. The algorithms used for data collection allow companies to see very specific patterns and behavior in consumers all while keeping their identities safe.

One way companies can harness this power while heeding privacy worries is to aggregate their data. If the data shows 50 people following a particular shopping pattern, stop there and act on that data rather than mining further and potentially exposing individual behavior.
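As a rough illustration of that idea, the sketch below counts distinct customers per shopping pattern and reports only the patterns followed by a large enough group. It is a minimal example under stated assumptions, not a complete privacy solution: the function name `aggregate_patterns`, the `(customer_id, pattern)` record layout, and the sample pattern labels are all hypothetical, and the threshold of 50 simply mirrors the figure used in the text.

```python
# Minimal sketch: report shopping patterns only at the group level.
# All names and sample values here are hypothetical illustrations.

MIN_GROUP_SIZE = 50  # mirrors the "50 people" example in the text


def aggregate_patterns(records, min_group_size=MIN_GROUP_SIZE):
    """Return {pattern: distinct customer count} for sufficiently large groups.

    `records` is an iterable of (customer_id, pattern) pairs. Patterns followed
    by fewer than `min_group_size` distinct customers are dropped, so small
    groups that could point to individuals never appear in the output.
    """
    customers_by_pattern = {}
    for customer_id, pattern in records:
        # Use a set so repeat purchases by one customer do not inflate the group.
        customers_by_pattern.setdefault(pattern, set()).add(customer_id)

    # Only counts leave this function; customer identifiers stay internal.
    return {
        pattern: len(customers)
        for pattern, customers in customers_by_pattern.items()
        if len(customers) >= min_group_size
    }


# Usage with made-up records: 60 customers share one pattern, 3 share another.
records = [(f"customer-{i}", "weekend-grocery") for i in range(60)]
records += [(f"customer-{i}", "late-night-electronics") for i in range(3)]

report = aggregate_patterns(records)
print(report)  # only the 60-customer pattern is reported
```

The design choice is to stop at the count: the small group is suppressed entirely rather than reported with fewer details, which keeps the output aligned with the "stop there and act on that data" advice.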

Things are getting very interesting. Google, Facebook, Amazon, and Microsoft collect the most private information and also bear the most responsibility. Because they understand data so well, companies like Google typically have the strongest controls in place for analyzing and protecting the data they collect.

Finally, Analyze the Analysts

Analytics will increasingly play a significant role in today’s integrated, global industries, where the individual decisions of analytics professionals may affect decision making at the highest levels in ways unimagined years ago. A wrong or misjudged model, analysis, or statistic poses a substantial risk that can jeopardize the proper functioning of an organization.

Instruction, rules and supervision are essential, but they alone cannot prevent lapses. Given all this, it is imperative that ethics be deeply ingrained in the analytics curriculum today. I believe that some of the tenets of this code of ethics and standards in analytics and data science should be:

- Apply the same ethical benchmarks regardless of job title, cultural differences, or local laws.

- Place the integrity of the analytics profession above one’s own interests.

- Maintain a governance and standards mechanism that data scientists adhere to.

- Maintain and develop professional competence.

- Have top managers create a strong culture of analytics ethics at their firms, one that filters throughout their entire analytics organization.